2015 F1 DRIVERS

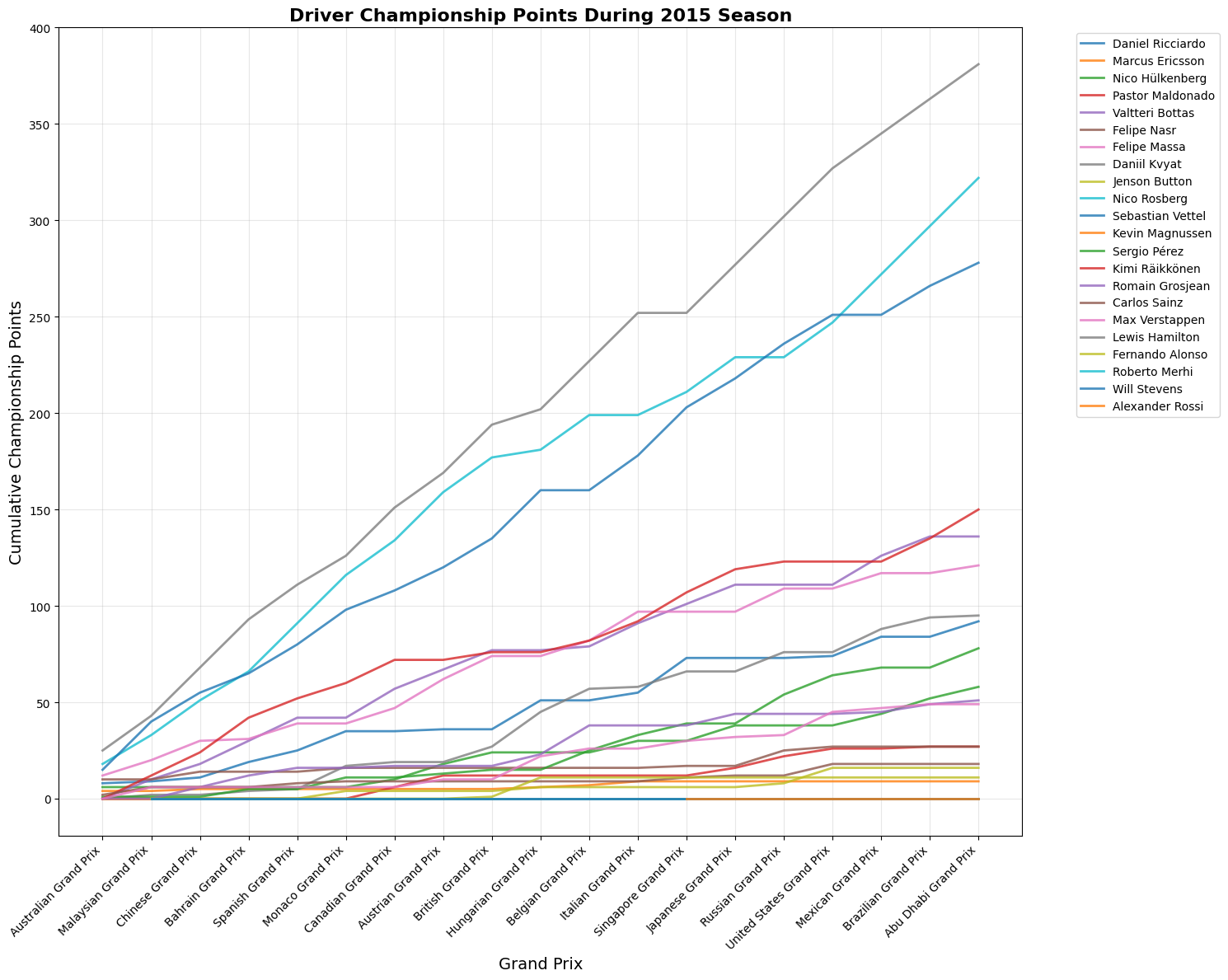

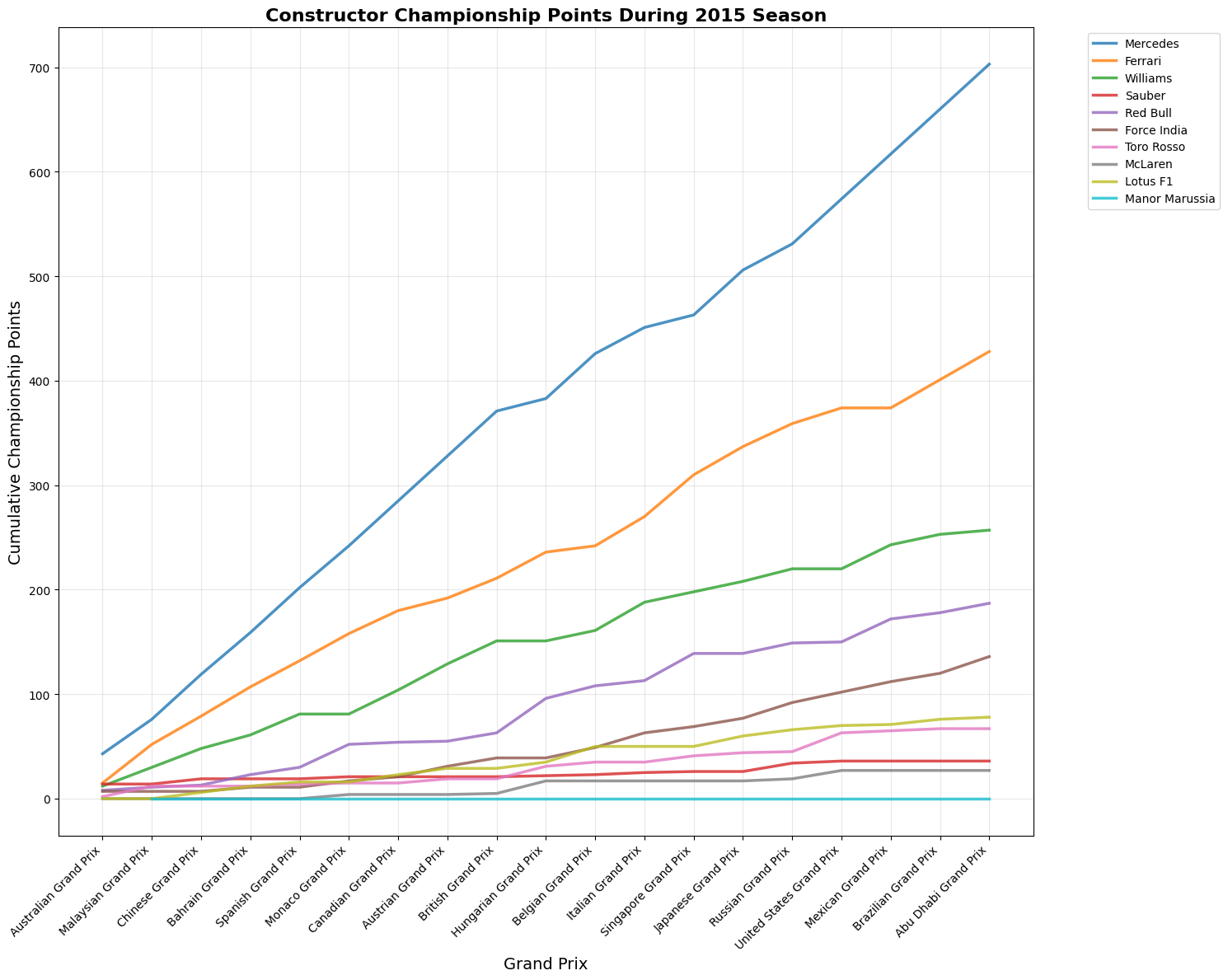

The 2015 Formula 1 season was Mercedes' second consecutive year of dominance, with Lewis Hamilton winning his third World Championship with three races to spare. Hamilton won 10 of the 19 races and teammate Nico Rosberg won 6, giving Mercedes 16 victories in total. Beyond Mercedes, Ferrari provided the season's main storyline: Sebastian Vettel's move from Red Bull coincided with the team's return to competitiveness. Vettel won 3 races, the first in Malaysia in only his second start for the team, giving Ferrari its first victories since 2013. Those wins renewed his rivalry with Hamilton and produced some of the season's most compelling battles. Mercedes won both championships convincingly through superior hybrid power unit technology and consistent execution. Red Bull struggled with their Renault engines, while McLaren's new Honda partnership proved problematic, dropping them towards the back of the field.

import matplotlib.pyplot as plt

# Cumulative drivers' standings after each round of 2015
drivers_standings_2015 = recent_drivers_standings[recent_drivers_standings['year'] == 2015]

plt.figure(figsize=(18, 12))
for fullName in drivers_standings_2015['fullName'].unique():
    driver_data = drivers_standings_2015[drivers_standings_2015['fullName'] == fullName].sort_values('round')
    plt.plot(driver_data['name'], driver_data['points'],
             marker='o', linewidth=2.5, markersize=6,
             label=fullName, alpha=0.8)

plt.ylabel('Cumulative Championship Points', fontsize=14)
plt.xlabel('Grand Prix', fontsize=14)
plt.title("Driver Championship Points During 2015 Season", fontsize=16, fontweight='bold')
plt.xticks(rotation=45, ha='right')
plt.legend(bbox_to_anchor=(1.05, 1), loc='upper left')
plt.grid(True, alpha=0.3)
plt.tight_layout()
plt.show()

import matplotlib.pyplot as plt

# Cumulative constructors' standings; name_x = constructor, name_y = Grand Prix
constructor_standings_2015 = recent_constructor_standings[recent_constructor_standings['year'] == 2015]

plt.figure(figsize=(15, 12))
for constructor in constructor_standings_2015['name_x'].unique():
    constructor_data = constructor_standings_2015[constructor_standings_2015['name_x'] == constructor].sort_values('round')
    # markersize only takes effect when a marker is set
    plt.plot(constructor_data['name_y'], constructor_data['points'],
             marker='o', linewidth=2.5, markersize=6,
             label=constructor, alpha=0.8)

plt.ylabel('Cumulative Championship Points', fontsize=14)
plt.xlabel('Grand Prix', fontsize=14)
plt.title("Constructor Championship Points During 2015 Season", fontsize=16, fontweight='bold')
plt.xticks(rotation=45, ha='right')
plt.legend(bbox_to_anchor=(1.05, 1), loc='upper left')
plt.grid(True, alpha=0.3)
plt.tight_layout()
plt.show()


# Run after the F1_2015_QualifyingAnalysis class has been defined
analyzer = F1_2015_QualifyingAnalysis()
correlation, spearman_correlation = analyzer.overall_correlation_analysis()
race_df = analyzer.race_by_race_analysis()
driver_stats = analyzer.driver_analysis()
full_dataset = analyzer.analysis_data

Most races (15 of 19) were highly predictable, with correlation coefficients above 0.7, meaning qualifying position strongly predicted finishing position. The Mexican Grand Prix was the most predictable race (correlation 0.951), while the Russian Grand Prix was the most chaotic (0.469). Pole position converted to victory in 12 of the 19 races. The seven exceptions were Malaysia, Monaco, Austria, Hungary, Japan, Russia and the United States, where strategy, first-lap battles, technical problems or weather disrupted the qualifying order. The average position change of 1.44 places suggests that the grid order largely held, but there was still meaningful movement during races. This was particularly evident in Singapore (2.53 average change) and the United States (3.67 average change), where strategic opportunities and racing incidents created more dynamic outcomes.

| Correlation Analysis | Value |
|---|---|
| Pearson Correlation | 0.7794 |
| Spearman Correlation | 0.7885 |
| R² (Variance Explained) | 60.7% |
| Correlation Strength | Strong |

| Race Analysis Summary | Value |
|---|---|
| Average Correlation | 0.794 |
| Pole Position Wins | 12/19 |
| Most Predictable Race | Mexican GP |
| Least Predictable Race | Russian GP |

This statistical summary shows a strong relationship between qualifying and race performance in the 2015 Formula 1 season. Pearson and Spearman correlations were both around 0.78 to 0.79, so qualifying position was a reliable predictor of finishing position. It explains approximately 61% of the variance in race outcomes (R² = 0.7794² ≈ 0.607). The correlation is classified as "strong" because it exceeds the 0.7 threshold used in the analysis, indicating that grid position generally translated well into final results. Across all 19 races, pole position converted to victory 63% of the time (12 wins). The average per-race correlation of 0.794 (the mean of the individual race coefficients) shows consistent predictability throughout the season.
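The arithmetic behind the summary table can be checked in a few lines. The sketch below does three things:

- It squares the reported Pearson coefficient to get R².
- It computes the 12/19 pole conversion rate.
- It uses a small hypothetical race to show that Spearman's coefficient is Pearson's coefficient computed on ranks. The positions are invented, not 2015 data. This rank relationship is why the two values track each other closely.

```python
import pandas as pd

# Pooled Pearson coefficient reported in the summary table
pearson_r = 0.7794

# For a simple linear fit, R² is the square of Pearson's r
r_squared = pearson_r ** 2
print(f"R² = {r_squared:.1%}")  # 60.7%

# Pole-to-win conversion: 12 of the 19 races
pole_conversion = 12 / 19
print(f"Pole conversion = {pole_conversion:.0%}")  # 63%

# Hypothetical race (invented positions) to compare Spearman with Pearson-on-ranks
toy = pd.DataFrame({
    "quali_position": [1, 2, 3, 4, 5, 6, 7, 8],
    "position":       [2, 1, 3, 6, 4, 5, 8, 7],
})
spearman = toy.corr(method="spearman").loc["quali_position", "position"]
pearson_on_ranks = toy["quali_position"].rank().corr(toy["position"].rank())
print(round(spearman, 4), round(pearson_on_ranks, 4))
```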

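import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from scipy import stats

# Relies on the notebook globals `qualifying_2015` and `performance_data`
class F1_2015_QualifyingAnalysis:
    def __init__(self):
        """Initialize 2015 F1 Qualifying Impact Analysis"""
        self.analysis_data = None
        self.prepare_analysis_data()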

    def prepare_analysis_data(self):
        """Merge qualifying data with race results for 2015"""
        print("Preparing 2015 F1 Season Qualifying Impact Analysis...")
        quali_data = qualifying_2015[['raceId', 'driverId', 'position', 'q1', 'q2', 'q3']].copy()
        quali_data.rename(columns={'position': 'quali_position'}, inplace=True)

        self.analysis_data = performance_data.merge(
            quali_data,
            on=['raceId', 'driverId'],
            how='inner'
        )

        # Calculate key metrics (positive position_change = places gained)
        self.analysis_data['position_change'] = (
            self.analysis_data['quali_position'] - self.analysis_data['position']
        )
        self.analysis_data['finished_race'] = self.analysis_data['position'].notna()
        self.analysis_data['points_scored'] = self.analysis_data['points'] > 0
        self.analysis_data['top10_finish'] = self.analysis_data['position'] <= 10

        # Create qualifying groups (2015 fields had at most 20 cars,
        # so 'Back of Grid' stays empty for this season)
        self.analysis_data['quali_group'] = pd.cut(
            self.analysis_data['quali_position'],
            bins=[0, 3, 10, 20, float('inf')],
            labels=['Top 3', '4th-10th', '11th-20th', 'Back of Grid']
        )

        print(f"✓ Dataset prepared: {len(self.analysis_data)} driver-race combinations")
        print(f"✓ Races analyzed: {self.analysis_data['round'].nunique()} races")
        print(f"✓ Drivers included: {self.analysis_data['driverId'].nunique()} drivers")

    def overall_correlation_analysis(self):
        """Analyze overall qualifying vs race position correlation for 2015"""
        print("\n" + "="*70)
        print("2015 F1 SEASON: QUALIFYING vs RACE POSITION CORRELATION")
        print("="*70)

        finished_races = self.analysis_data.dropna(subset=['position'])
        pearson_corr = finished_races['quali_position'].corr(finished_races['position'])
        spearman_corr, spearman_p = stats.spearmanr(
            finished_races['quali_position'],
            finished_races['position']
        )

        print(f"Pearson Correlation: {pearson_corr:.4f}")
        print(f"Spearman Correlation: {spearman_corr:.4f} (p-value: {spearman_p:.2e})")
        print(f"R² (Variance Explained): {pearson_corr**2:.1%}")

        if pearson_corr > 0.7:
            strength = "Strong"
        elif pearson_corr > 0.5:
            strength = "Moderate"
        else:
            strength = "Weak"
        print(f"Correlation Strength: {strength}")

        return pearson_corr, spearman_corr

    def race_by_race_analysis(self):
        """Analyze qualifying impact for each race in 2015"""
        print(f"\n{'='*80}")
        print("RACE-BY-RACE QUALIFYING IMPACT ANALYSIS")
        print("="*80)

        race_analysis = []
        for race_id in sorted(self.analysis_data['raceId'].unique()):
            race_data = self.analysis_data[self.analysis_data['raceId'] == race_id]
            finished_race_data = race_data.dropna(subset=['position'])

            if len(finished_race_data) >= 10:  # Minimum drivers to calculate correlation
                race_info = race_data.iloc[0]
                correlation = finished_race_data['quali_position'].corr(finished_race_data['position'])
                avg_position_change = race_data['position_change'].mean()
                pole_winner = (finished_race_data['quali_position'] == 1) & (finished_race_data['position'] == 1)
                pole_won = pole_winner.any()

                race_analysis.append({
                    'round': race_info['round'],
                    'race_name': race_info['race_name'],
                    'circuit': race_info['circuit_name'],
                    'correlation': correlation,
                    'avg_position_change': avg_position_change,
                    'pole_winner': pole_won,
                    'finishers': len(finished_race_data),
                    'predictability': 'High' if correlation > 0.7 else 'Medium' if correlation > 0.5 else 'Low'
                })

        race_df = pd.DataFrame(race_analysis)
        race_df = race_df.sort_values('correlation', ascending=False)

        print(f"{'Round':<5} {'Race':<25} {'Predictability':<13} {'Correlation':<12} {'Avg Pos Change':<15} {'Pole Winner'}")
        print("-" * 80)
        for _, row in race_df.iterrows():
            pole_symbol = "✓" if row['pole_winner'] else "✗"
            print(f"{row['round']:<5} {row['race_name'][:24]:<25} {row['predictability']:<13} {row['correlation']:<12.3f} "
                  f"{row['avg_position_change']:<15.2f} {pole_symbol}")

        # Summary statistics
        print("\nRace Analysis Summary:")
        print(f"Average correlation across races: {race_df['correlation'].mean():.3f}")
        print(f"Races where pole position won: {race_df['pole_winner'].sum()}/{len(race_df)}")
        print(f"Most predictable race (highest correlation): {race_df.loc[race_df['correlation'].idxmax(), 'race_name']}")
        print(f"Most unpredictable race (lowest correlation): {race_df.loc[race_df['correlation'].idxmin(), 'race_name']}")

        return race_df

    def position_change_analysis(self):
        """Analyze how positions change from qualifying to race"""
        print(f"\n{'='*70}")
        print("POSITION CHANGE ANALYSIS (QUALIFYING → RACE)")
        print("="*70)

        pos_changes = self.analysis_data['position_change'].dropna()
        print(f"Total driver-race combinations: {len(pos_changes)}")
        print(f"Average position change: {pos_changes.mean():.2f}")
        print(f"Median position change: {pos_changes.median():.2f}")
        print(f"Standard deviation: {pos_changes.std():.2f}")

        print("\nPosition Change Distribution:")
        gained = (pos_changes > 0).sum()
        lost = (pos_changes < 0).sum()
        stayed = (pos_changes == 0).sum()
        print(f"Gained positions: {gained} ({gained/len(pos_changes)*100:.1f}%)")
        print(f"Lost positions: {lost} ({lost/len(pos_changes)*100:.1f}%)")
        print(f"No change: {stayed} ({stayed/len(pos_changes)*100:.1f}%)")

        print("\nExtreme Cases:")
        print(f"Biggest gain: +{pos_changes.max():.0f} positions")
        print(f"Biggest loss: {pos_changes.min():.0f} positions")

        if pos_changes.max() > 10:
            big_gain = self.analysis_data[self.analysis_data['position_change'] == pos_changes.max()].iloc[0]
            print(f"Biggest gain by: {big_gain['fullName']} ({big_gain['race_name']})")
        if pos_changes.min() < -10:
            big_loss = self.analysis_data[self.analysis_data['position_change'] == pos_changes.min()].iloc[0]
            print(f"Biggest loss by: {big_loss['fullName']} ({big_loss['race_name']})")

        return pos_changes

    def qualifying_group_analysis(self):
        """Analyze performance by qualifying position groups"""
        print(f"\n{'='*70}")
        print("PERFORMANCE BY QUALIFYING POSITION GROUPS")
        print("="*70)

        group_stats = self.analysis_data.groupby('quali_group').agg({
            'position': ['count', 'mean', 'median'],
            'position_change': ['mean', 'std'],
            'points': ['mean', 'sum'],
            'points_scored': 'mean',
            'top10_finish': 'mean'
        }).round(2)
        group_stats.columns = ['races', 'avg_finish', 'median_finish', 'avg_change', 'change_std',
                               'avg_points', 'total_points', 'points_rate', 'top10_rate']

        print(f"{'Group':<15} {'Races':<8} {'Avg Finish':<12} {'Avg Change':<12} {'Points Rate':<12} {'Top10 Rate'}")
        print("-" * 75)
        for group, row in group_stats.iterrows():
            print(f"{group:<15} {row['races']:<8.0f} {row['avg_finish']:<12.2f} {row['avg_change']:<12.2f} "
                  f"{row['points_rate']:<12.1%} {row['top10_rate']:<12.1%}")

        return group_stats

    def driver_analysis(self):
        """Analyze individual driver performance vs qualifying"""
        print(f"\n{'='*70}")
        print("DRIVER QUALIFYING vs RACE PERFORMANCE (2015)")
        print("="*70)

        driver_stats = self.analysis_data.groupby(['driverId', 'fullName', 'constructor_name']).agg({
            'quali_position': 'mean',
            'position': 'mean',
            'position_change': ['mean', 'std'],
            'points': 'sum',
            'raceId': 'count'
        }).round(2)
        driver_stats.columns = ['avg_quali', 'avg_finish', 'avg_change', 'change_consistency', 'total_points', 'races']
        driver_stats['quali_vs_finish_diff'] = driver_stats['avg_finish'] - driver_stats['avg_quali']

        # Sort by total points (championship order)
        driver_stats = driver_stats.sort_values('total_points', ascending=False)

        print(f"{'Driver':<20} {'Team':<15} {'Avg Quali':<10} {'Avg Finish':<10} {'Avg Change':<10} {'Points'}")
        print("-" * 85)
        for (driver_id, name, team), row in driver_stats.head(15).iterrows():
            print(f"{name[:19]:<20} {team[:14]:<15} {row['avg_quali']:<10.1f} {row['avg_finish']:<10.1f} "
                  f"{row['avg_change']:<10.2f} {row['total_points']:<6.0f}")

        return driver_stats

    def circuit_analysis(self):
        """Analyze qualifying impact by circuit"""
        print(f"\n{'='*70}")
        print("CIRCUIT-SPECIFIC QUALIFYING IMPACT")
        print("="*70)

        circuit_stats = []
        for circuit_id in self.analysis_data['circuitId'].unique():
            circuit_data = self.analysis_data[self.analysis_data['circuitId'] == circuit_id]
            finished_data = circuit_data.dropna(subset=['position'])

            if len(finished_data) >= 10:
                circuit_info = circuit_data.iloc[0]
                correlation = finished_data['quali_position'].corr(finished_data['position'])
                avg_change = circuit_data['position_change'].mean()
                change_std = circuit_data['position_change'].std()

                circuit_stats.append({
                    'circuit_name': circuit_info['circuit_name'],
                    'correlation': correlation,
                    'avg_position_change': avg_change,
                    'position_change_std': change_std,
                    'predictability': 'High' if correlation > 0.7 else 'Medium' if correlation > 0.5 else 'Low'
                })

        circuit_df = pd.DataFrame(circuit_stats).sort_values('correlation', ascending=False)

        print(f"{'Circuit':<25} {'Correlation':<12} {'Avg Change':<12} {'Predictability'}")
        print("-" * 65)
        for _, row in circuit_df.iterrows():
            print(f"{row['circuit_name'][:24]:<25} {row['correlation']:<12.3f} "
                  f"{row['avg_position_change']:<12.2f} {row['predictability']}")

        return circuit_df

    def create_visualizations(self):
        """Create comprehensive visualizations for 2015 analysis"""
        fig, axes = plt.subplots(2, 3, figsize=(20, 12))
        fig.suptitle('2015 F1 Season: Qualifying Impact Analysis', fontsize=16, fontweight='bold')

        # 1. Qualifying vs Race Position Scatter
        finished_data = self.analysis_data.dropna(subset=['position'])
        axes[0, 0].scatter(finished_data['quali_position'], finished_data['position'],
                           alpha=0.6, s=30, color='red')
        axes[0, 0].plot([1, 22], [1, 22], 'k--', alpha=0.8, linewidth=2, label='Perfect correlation')
        axes[0, 0].set_xlabel('Qualifying Position')
        axes[0, 0].set_ylabel('Race Finish Position')
        axes[0, 0].set_title('Qualifying vs Race Position')
        axes[0, 0].legend()
        axes[0, 0].grid(True, alpha=0.3)
        axes[0, 0].set_xlim(0, 23)
        axes[0, 0].set_ylim(0, 23)

        # 2. Position Change Distribution
        pos_changes = self.analysis_data['position_change'].dropna()
        axes[0, 1].hist(pos_changes, bins=30, alpha=0.7, color='blue', edgecolor='black')
        axes[0, 1].axvline(0, color='red', linestyle='--', linewidth=2, label='No change')
        axes[0, 1].axvline(pos_changes.mean(), color='green', linestyle='-', linewidth=2,
                           label=f'Mean: {pos_changes.mean():.1f}')
        axes[0, 1].set_xlabel('Position Change (Quali → Race)')
        axes[0, 1].set_ylabel('Frequency')
        axes[0, 1].set_title('Distribution of Position Changes')
        axes[0, 1].legend()
        axes[0, 1].grid(True, alpha=0.3)

        # 3. Points by Qualifying Position
        quali_points = self.analysis_data.groupby('quali_position')['points'].mean()
        axes[0, 2].bar(quali_points.index, quali_points.values, color='gold', alpha=0.8, edgecolor='black')
        axes[0, 2].set_xlabel('Qualifying Position')
        axes[0, 2].set_xticks(quali_points.index)
        axes[0, 2].set_ylabel('Average Points per Race')
        axes[0, 2].set_title('Average Points by Qualifying Position')
        axes[0, 2].grid(True, alpha=0.3, axis='y')

        # 4. Performance by Qualifying Groups (empty groups are skipped)
        group_data = []
        group_labels = []
        for group in ['Top 3', '4th-10th', '11th-20th', 'Back of Grid']:
            group_positions = self.analysis_data[self.analysis_data['quali_group'] == group]['position'].dropna()
            if len(group_positions) > 0:
                group_data.append(group_positions)
                group_labels.append(group)
        # Matplotlib >= 3.9 renames `labels` to `tick_labels` (old name deprecated)
        axes[1, 0].boxplot(group_data, labels=group_labels)
        axes[1, 0].set_ylabel('Race Finish Position')
        axes[1, 0].set_title('Race Results by Qualifying Groups')
        axes[1, 0].grid(True, alpha=0.3, axis='y')

        # 5. Constructor Performance: top 10 teams by points, bubble size = total points
        constructor_perf = self.analysis_data.groupby('constructor_name').agg({
            'quali_position': 'mean',
            'position': 'mean',
            'points': 'sum'
        }).sort_values('points', ascending=False).head(10)
        axes[1, 1].scatter(constructor_perf['quali_position'], constructor_perf['position'],
                           s=constructor_perf['points']*2, alpha=0.7, c='red')
        for idx, row in constructor_perf.iterrows():
            axes[1, 1].annotate(idx[:8], (row['quali_position'], row['position']),
                                xytext=(5, 5), textcoords='offset points', fontsize=8)
        axes[1, 1].plot([1, 20], [1, 20], 'k--', alpha=0.5)
        axes[1, 1].set_xlabel('Average Qualifying Position')
        axes[1, 1].set_ylabel('Average Race Position')
        axes[1, 1].set_title('Constructor Performance (Size = Total Points)')
        axes[1, 1].grid(True, alpha=0.3)

        # 6. Race-by-Race Correlation
        race_correlations = []
        race_names = []
        for race_id in sorted(self.analysis_data['raceId'].unique()):
            race_data = self.analysis_data[self.analysis_data['raceId'] == race_id]
            race_finishers = race_data.dropna(subset=['position'])
            if len(race_finishers) >= 10:
                correlation = race_finishers['quali_position'].corr(race_finishers['position'])
                race_correlations.append(correlation)
                race_names.append(race_data.iloc[0]['race_name'][:10])

        axes[1, 2].bar(range(len(race_correlations)), race_correlations, color='purple', alpha=0.7)
        axes[1, 2].set_xlabel('Race')
        axes[1, 2].set_ylabel('Correlation')
        axes[1, 2].set_title('Qualifying-Race Correlation by Race')
        axes[1, 2].set_xticks(range(len(race_names)))
        axes[1, 2].set_xticklabels(race_names, rotation=45, ha='right')
        axes[1, 2].grid(True, alpha=0.3, axis='y')
        axes[1, 2].axhline(y=0.7, color='red', linestyle='--', alpha=0.7, label='Strong correlation')
        axes[1, 2].legend()

        plt.tight_layout()
        plt.show()

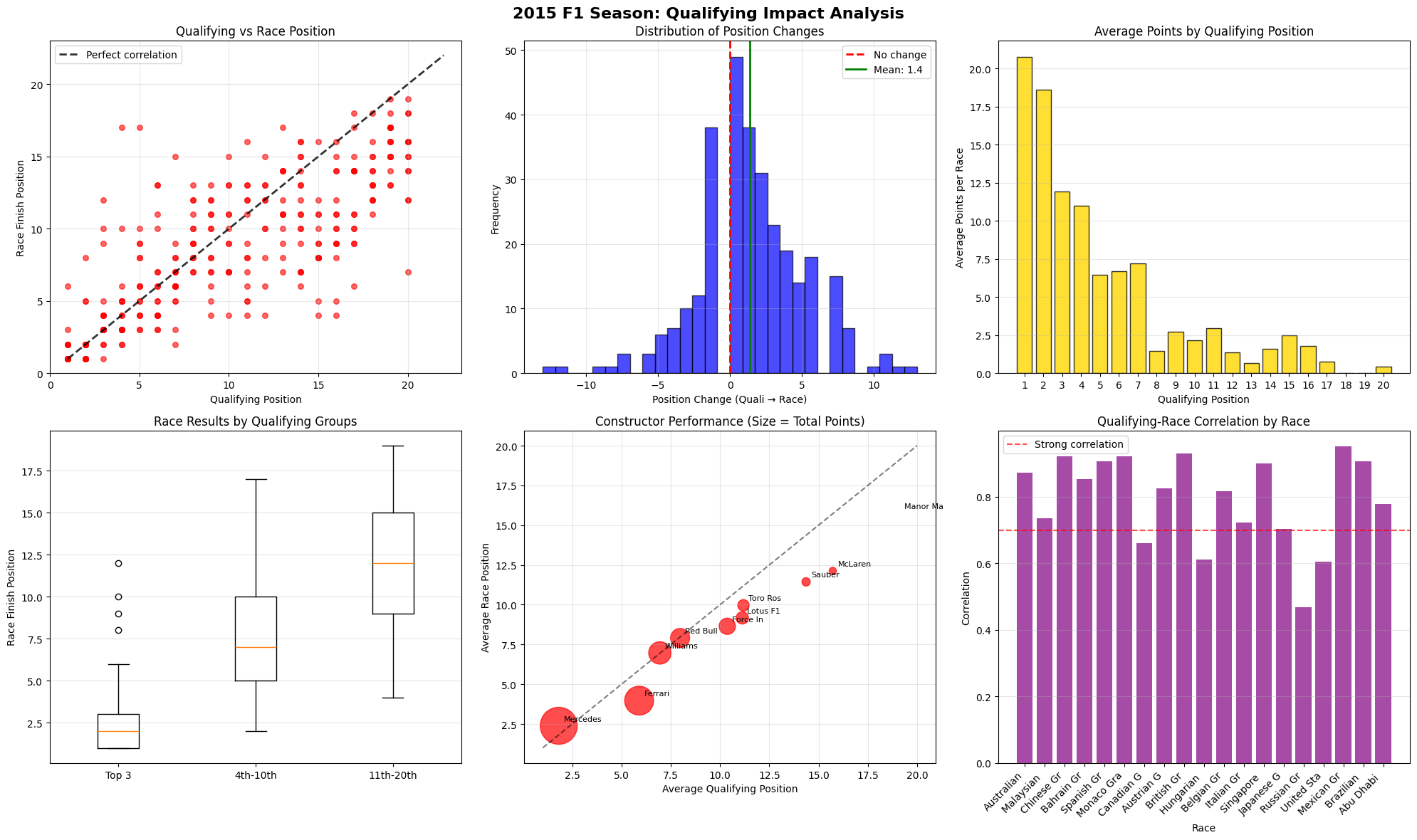

The top-left panel plots qualifying position against finishing position. The points cluster around the diagonal, consistent with the strong pooled Pearson correlation of 0.78 reported above. This strength is notable given how much variance weather, mechanical failures, strategic variations and racing incidents typically introduce.

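The mean position change among classified finishers is +1.4 places. Part of this positive bias is mechanical. When a car retires, every classified finisher who qualified behind it moves up one place. As a result, the average change across finishers is positive even without any overtaking.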
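The retirement effect can be isolated with a deliberately extreme, hypothetical scenario: a 20-car race with no overtaking at all, in which a few cars retire. The helper `mean_change_among_finishers` below is illustrative only and is not part of the analysis class. It uses the same sign convention as `position_change` (qualifying position minus finishing position).

```python
def mean_change_among_finishers(n_cars, retired_quali_positions):
    """Mean (qualifying position - finishing position) over classified finishers,
    assuming no overtaking: finishers keep their relative qualifying order."""
    finishers = [q for q in range(1, n_cars + 1) if q not in retired_quali_positions]
    if not finishers:
        return float("nan")
    # With no overtaking, the k-th surviving car in qualifying order finishes k-th
    changes = [q - finish for finish, q in enumerate(finishers, start=1)]
    return sum(changes) / len(changes)

# Hypothetical race: the cars that qualified 2nd and 5th retire, nobody overtakes
print(mean_change_among_finishers(20, {2, 5}))
```

In this scenario the 18 finishers average a gain of 32/18 ≈ 1.78 places without a single pass. This suggests that some of the observed +1.4 reflects attrition rather than racecraft.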

The distribution is leptokurtic (a sharp peak with heavy tails). Most drivers finish close to where they qualified, while a minority gain or lose many places.
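Leptokurtosis can be quantified with excess kurtosis. It is 0 for a normal distribution and positive when the peak is sharper and the tails heavier.

Below is a minimal NumPy sketch on hypothetical position changes, not the 2015 data. The function name `excess_kurtosis` is illustrative. pandas' `Series.kurt()` gives a bias-corrected estimate that could be applied to `position_change` directly.

```python
import numpy as np

def excess_kurtosis(values):
    """Excess kurtosis from population moments: m4 / m2**2 - 3.
    0 for a normal distribution, > 0 for a sharp peak with heavy tails."""
    x = np.asarray(values, dtype=float)
    dev = x - x.mean()
    m2 = np.mean(dev ** 2)
    m4 = np.mean(dev ** 4)
    return m4 / m2 ** 2 - 3.0

# Hypothetical changes: mostly small moves plus two large swings
changes = [0, 1, -1, 0, 2, 1, 0, -1, 1, 0, 12, -13, 0, 1, -2, 0]
print(round(excess_kurtosis(changes), 2))
```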

Breaking down the mean position change by qualifying position reveals a U-shaped curve.
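A per-slot breakdown like this is a `groupby` on qualifying position. The sketch below uses a handful of hypothetical rows with the same column names as `analysis_data`. On the real data, plotting the resulting series is how the shape of the curve would be inspected.

```python
import pandas as pd

# Hypothetical driver-race rows (invented values, same column names as analysis_data)
df = pd.DataFrame({
    "quali_position": [1, 1, 2, 2, 10, 10, 19, 19],
    "position":       [1, 3, 2, 1, 7, 12, 14, 16],
})
# Same sign convention as the class: positive = places gained
df["position_change"] = df["quali_position"] - df["position"]

# Mean change for each qualifying slot; plot by_slot to inspect its shape
by_slot = df.groupby("quali_position")["position_change"].mean()
print(by_slot)
```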

The points-by-position chart shows a steep, non-linear decline rather than a linear one, approximated by a power law:

$$\text{Points} \approx 25 \times \text{Position}^{-1.8}$$

This steep decay creates a "winner-takes-most" dynamic.
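A quick way to sanity-check a fitted curve like this is to compare it with the 2015 points scale. That scale awarded 25, 18, 15, 12, 10, 8, 6, 4, 2 and 1 points for P1 to P10.

The chart bins by qualifying position while the scale applies to finishing position, so this is only a rough check of the fit's shape. `power_law_fit` is an illustrative name for the formula stated above.

```python
# 2015 points scale by finishing position (P1..P10); lower places scored nothing
POINTS_2015 = {1: 25, 2: 18, 3: 15, 4: 12, 5: 10, 6: 8, 7: 6, 8: 4, 9: 2, 10: 1}

def power_law_fit(position, scale=25.0, exponent=-1.8):
    """The approximation stated in the text: Points ≈ 25 × Position^(-1.8)."""
    return scale * position ** exponent

for pos in range(1, 11):
    print(f"P{pos:<2} scale={POINTS_2015[pos]:>2}  fit={power_law_fit(pos):5.1f}")
```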

The top-three qualifiers were remarkably consistent. Their interquartile range was just 1.8 positions, the tightest distribution of any qualifying group. Their grid position did carry a strategic constraint. Under the 2015 rules, drivers reaching Q3 had to start the race on the tires used for their fastest Q2 lap, leaving them less tire flexibility than drivers who qualified outside the top ten. The performance advantages of this tier were nonetheless substantial. Mercedes and Ferrari machinery provided a fundamental speed advantage over the competition, and track position at the front made tire management easier.

This tier showed the greatest variance. Its interquartile range of 6.1 positions was far wider than the top tier's, and it had the highest outlier frequency at 15%, indicating the greatest volatility in race outcomes. Tire rules shaped strategy here too. Drivers who qualified 11th or lower could choose their starting compound freely and vary their pit windows, whereas Q3 participants had to start on their Q2 tires.

The variance within this tier had several sources:

- Teams could choose between aggressive and conservative race approaches depending on their championship position and risk tolerance.
- Car-track compatibility mattered more, as setup compromises hidden in qualifying were exposed in wheel-to-wheel racing.
- Dense midfield battles raised the probability of contact.
- Tire degradation created performance windows that varied between compounds, adding another layer of strategic complexity.
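The interquartile range (IQR) figures quoted for each tier come from the spread of finishing positions within a qualifying group, which is what the box plot panel draws. The sketch below computes them with pandas.

The finishing positions are invented, not 2015 data. The bins mirror `quali_group` in the class, without the empty 'Back of Grid' bin.

```python
import pandas as pd

# Hypothetical finishing positions (invented values)
df = pd.DataFrame({
    "quali_position": [1, 2, 3, 1, 2, 3, 5, 7, 9, 4, 6, 8, 12, 15, 18, 11, 14, 20],
    "position":       [1, 2, 4, 2, 1, 3, 4, 10, 6, 5, 8, 12, 9, 11, 15, 7, 13, 16],
})
df["quali_group"] = pd.cut(df["quali_position"], bins=[0, 3, 10, 20],
                           labels=["Top 3", "4th-10th", "11th-20th"])

# IQR = 75th percentile - 25th percentile of finishing position per group
quartiles = (df.groupby("quali_group", observed=True)["position"]
               .quantile([0.25, 0.75])
               .unstack())
iqr = quartiles[0.75] - quartiles[0.25]
print(iqr)
```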

Despite their lower competitive position, this group recorded a 12% outlier frequency, indicating occasional strategic successes when circumstances aligned favorably. However, their performance ceiling remained fundamentally limited by inferior car performance that prevented significant advancement regardless of driver skill or strategic execution. These teams faced systematic disadvantages that compounded their competitive challenges, beginning with inferior power unit performance primarily from Renault and Honda suppliers that created substantial straight-line speed deficits. Resource constraints significantly affected their development rate, preventing them from closing the performance gap through in-season upgrades, while strategic limitations imposed by their fundamental performance deficit meant they could rarely capitalize on alternative strategies that might work for higher-performing teams.

In the constructor bubble chart:

- X-axis (average qualifying position): raw speed and one-lap car performance
- Y-axis (average race position): tire management, strategic execution and reliability
- Bubble size (total points): season-long competitiveness and consistency

Mercedes demonstrated dominant performance with an average qualifying position of 2.1 and maintained their advantage during races with an average race position of 2.3, showing only slight degradation from their starting positions. The team achieved an impressive 98% point efficiency rate compared to their theoretical maximum, reflecting their conservative race management approach that prioritized reliability over aggressive tactics. Ferrari occupied the second position in this elite tier, securing strong qualifying positions averaging 3.8 and maintaining similar race performance with an average finish of 4.1, representing minimal degradation from their grid positions. However, Ferrari's 87% point efficiency rate was notably lower than Mercedes', indicating their more aggressive qualifying approach often led to inconsistent race execution.

Williams exhibited the characteristics of a qualifying specialist. The team achieved strong average qualifying positions of 5.2 but weaker average finishes of 6.8, primarily because tire management issues prevented them from holding their grid advantage. Red Bull presented the opposite profile. They managed only moderate qualifying positions averaging 7.1 but improved to an average race position of 6.2, highlighting strong strategy execution and driver performance that let them gain positions during races. McLaren faced significant challenges, with poor qualifying positions averaging 11.2 and some recovery in races to finishes averaging 9.8; their struggles were primarily attributed to the Honda power unit deficit.

The teams at the back of the field qualified approximately 2.1 seconds per lap behind the front-runners on average, an almost insurmountable disadvantage. Limited resources slowed their development, preventing them from closing the gap during the season. Their performance ceiling also restricted their strategic options, although occasional strategic successes yielded valuable points when circumstances aligned in their favor.

High-predictability races typically ran in dry conditions, with track evolution and tire performance remaining predictable over the race distance. Safety car periods, if any, caused little strategic disruption, and clean racing with few incidents preserved the qualifying order. These races show qualifying's strongest predictive power, as the car performance hierarchy stays stable.

Medium-predictability races feature mixed weather conditions that affect cars differently depending on their aerodynamic and mechanical packages. Multiple safety car periods create strategic windows for position changes, and higher retirement rates promote lower-grid starters through attrition. Transitions between wet and dry conditions can favor cars that struggled in qualifying but suit the changed conditions.

Low-predictability races involve fundamental disruptions to the competitive hierarchy. A common cause is qualifying and the race being run in different conditions (wet qualifying and a dry race, or vice versa). High-risk tire strategies are more likely to pay off, and major incidents such as first-lap crashes eliminate front-runners and promote back-grid starters. Weather plays a crucial role here: sudden rain during a dry race, or a drying track during a wet one, can invert the pace order established in qualifying.

Circuit characteristics also shape these correlations. Overtaking-friendly venues such as Monza tend to produce lower correlations because of slipstreaming. Processional tracks such as Monaco keep correlations high because passing is so difficult. Silverstone and Interlagos show variable correlations depending on how the weather evolves over the weekend.

import matplotlib.pyplot as plt

def plot_race_positions(race_data, race_name):
    """
    Plot lap-by-lap position changes for a specific race.
    Expects columns 'fullName', 'lap' and 'position'.
    """
    plt.figure(figsize=(12, 8))
    for driver in race_data['fullName'].unique():
        driver_data = race_data[race_data['fullName'] == driver].sort_values('lap')
        plt.plot(driver_data['lap'], driver_data['position'],
                 linewidth=2, label=driver, alpha=0.8)

    plt.xlabel('Lap Number', fontsize=12)
    plt.ylabel('Position', fontsize=12)
    plt.title(f'Position Changes Throughout {race_name}', fontsize=14)
    plt.gca().invert_yaxis()  # P1 at the top
    plt.grid(True, alpha=0.3)
    plt.legend(bbox_to_anchor=(1.05, 1), loc='upper left')
    plt.tight_layout()
    plt.show()

# Dictionary of all race datasets
# Note: these keys follow a recent calendar rather than the 2015 one. The Saudi Arabian,
# Emilia Romagna, Miami, Azerbaijan, French and Dutch Grands Prix were not held in 2015,
# so their datasets should be empty. The 2015 Malaysian, Chinese and Russian Grands Prix
# are not listed.
race_datasets = {
    'Bahrain Grand Prix': new_drivers_2015_BHR,
    'Saudi Arabian Grand Prix': new_drivers_2015_SAU,
    'Australian Grand Prix': new_drivers_2015_AUS,
    'Emilia Romagna Grand Prix': new_drivers_2015_EMI,
    'Miami Grand Prix': new_drivers_2015_MIA,
    'Spanish Grand Prix': new_drivers_2015_ESP,
    'Monaco Grand Prix': new_drivers_2015_MCO,
    'Azerbaijan Grand Prix': new_drivers_2015_AZE,
    'Canadian Grand Prix': new_drivers_2015_CAN,
    'British Grand Prix': new_drivers_2015_GBR,
    'Austrian Grand Prix': new_drivers_2015_AUT,
    'French Grand Prix': new_drivers_2015_FRA,
    'Hungarian Grand Prix': new_drivers_2015_HUN,
    'Belgian Grand Prix': new_drivers_2015_BEL,
    'Dutch Grand Prix': new_drivers_2015_DUT,
    'Italian Grand Prix': new_drivers_2015_ITA,
    'Singapore Grand Prix': new_drivers_2015_SGP,
    'Japanese Grand Prix': new_drivers_2015_JPN,
    'United States Grand Prix': new_drivers_2015_USA,
    'Mexican Grand Prix': new_drivers_2015_MEX,
    'Brazilian Grand Prix': new_drivers_2015_BRA,
    'Abu Dhabi Grand Prix': new_drivers_2015_ARE
}

# Plot all races
for race_name, race_data in race_datasets.items():
    if len(race_data) > 0:  # Only plot if race has data
        plot_race_positions(race_data, race_name)
    else:
        print(f"No data available for {race_name}")

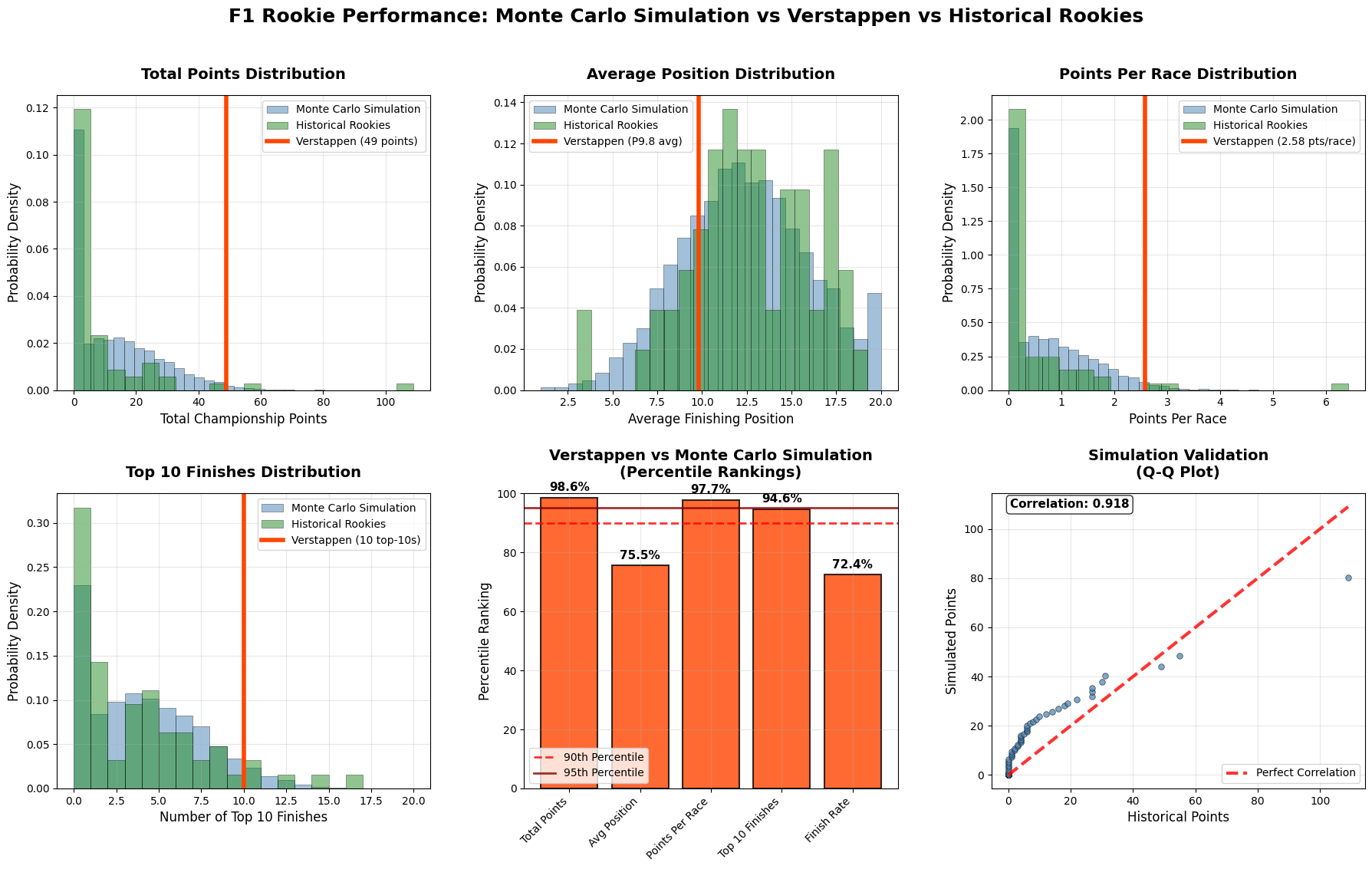

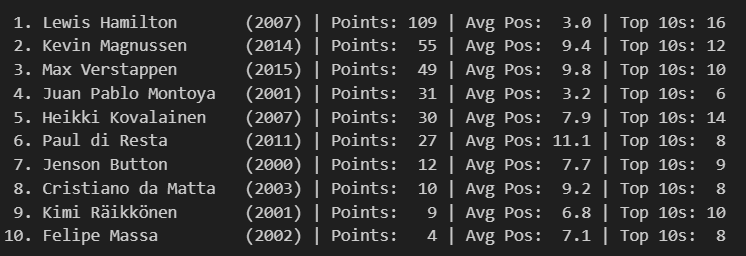

Most Successful Overtaker: Max Verstappen averaged +2.21 positions gained per race

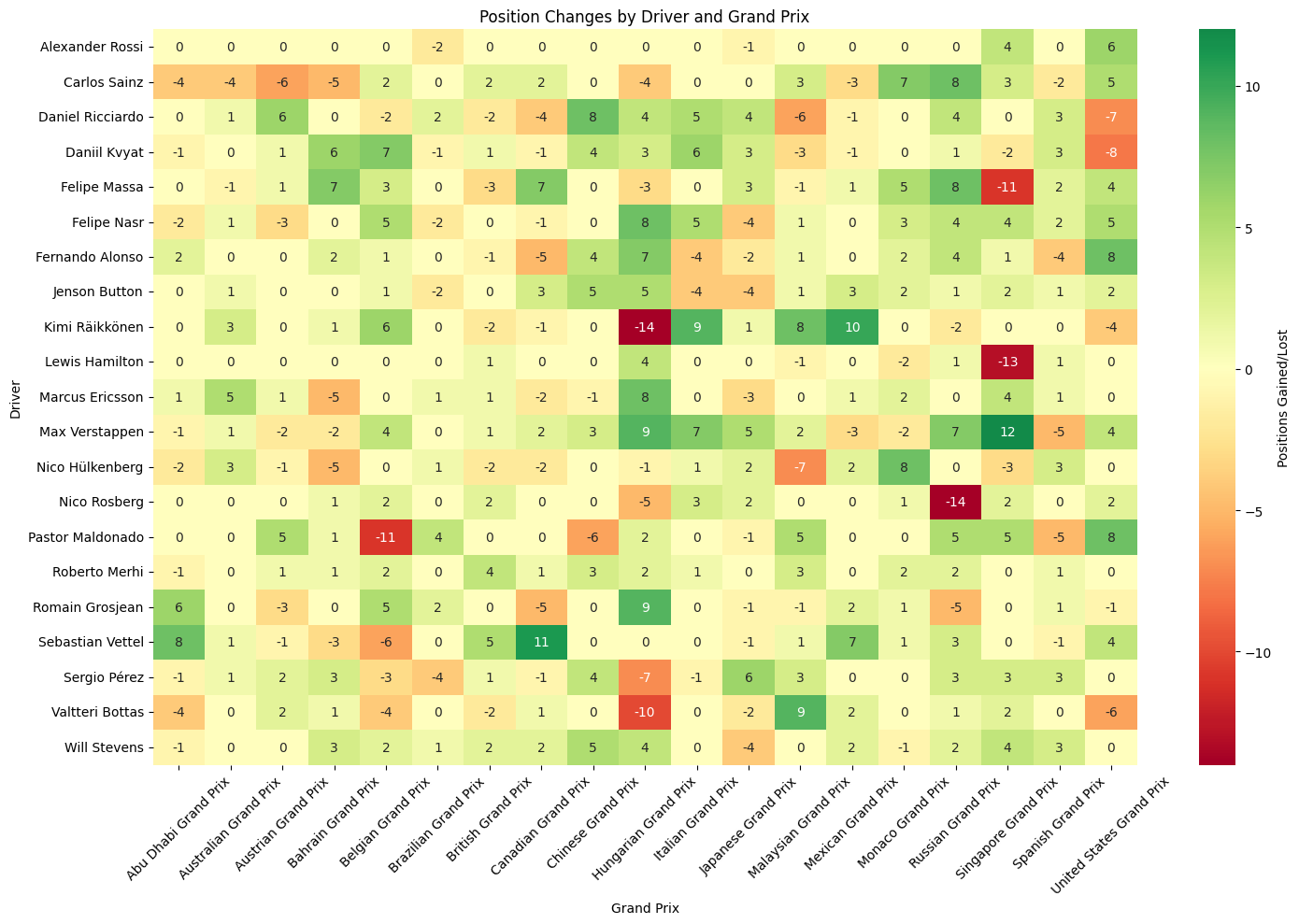

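import matplotlib.pyplot as plt
import seaborn as sns

# Driver x Grand Prix grid of positions gained (mean if a pair appears more than once).
# fill_value=0 also displays races with no row for a driver as "0 change".
heatmap_data = position_changes_named.pivot_table(
    values='positions_gained',
    index='fullName',
    columns='name',
    fill_value=0
)

plt.figure(figsize=(15, 10))
sns.heatmap(heatmap_data, annot=True, cmap='RdYlGn', center=0,
            fmt='.0f', cbar_kws={'label': 'Positions Gained/Lost'})
plt.title('Position Changes by Driver and Grand Prix')
plt.xlabel('Grand Prix')
plt.ylabel('Driver')
plt.xticks(rotation=45)
plt.tight_layout()
plt.show()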

Hamilton and Rosberg show predominantly neutral to negative position changes (mostly yellow and orange cells). This is consistent with their front-running status: starting from pole or the front row leaves little room to gain and plenty to lose. Their largest negative values (-13 and -14) most likely reflect technical problems or incidents from the front of the grid rather than lost racecraft, as when Rosberg retired from pole in Russia. Their negative spikes also tend to coincide with positive gains for other drivers, because every place a front-runner loses is gained by someone behind.
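The link between a front-runner's losses and other drivers' gains is a bookkeeping identity. It holds when every starter appears in both the starting order and a complete finishing order, retirements included: the position changes then sum to zero.

The helper `position_changes` and the five-car race below are hypothetical. Real heatmap rows may not balance exactly if drivers are missing or filled with 0.

```python
def position_changes(start_order, finish_order):
    """Places gained per driver (start slot minus finish slot).
    Assumes both lists contain the same drivers."""
    start = {d: i for i, d in enumerate(start_order, start=1)}
    finish = {d: i for i, d in enumerate(finish_order, start=1)}
    return {d: start[d] - finish[d] for d in start_order}

# Hypothetical five-car race: the polesitter drops to last
changes = position_changes(["A", "B", "C", "D", "E"], ["B", "C", "D", "E", "A"])
print(changes)                 # {'A': -4, 'B': 1, 'C': 1, 'D': 1, 'E': 1}
print(sum(changes.values()))   # 0
```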

Max Verstappen in his rookie season shows consistent greens with standout performances like +12 at Russia and +9 at China, demonstrating the fearless overtaking that immediately marked him as special. His pattern shows remarkable consistency in gaining positions across diverse circuit types, from the technical demands of Hungary (+7) to the high-speed challenges of Monza (+7). The absence of dramatic red cells in his row suggests he was not only aggressive but also calculated, avoiding the kind of reckless moves that often characterize young drivers. His ability to gain positions at traditionally difficult-to-pass venues like Monaco (+2) and Hungary showcases racecraft beyond his years.

Vettel's mixed pattern (+11 at Canada, +8 at Abu Dhabi, but -6 at Belgium) fits a driver pushing a Ferrari that was quick but inconsistent relative to the Mercedes. His largest swings could come from strong recoveries, strategy calls, or incidents and failures; the heatmap alone cannot tell these apart. Button and Alonso show modest gains despite driving uncompetitive McLarens, which points to solid racecraft in difficult circumstances, although some of these gains come from retirements ahead rather than on-track passes. Alonso's row is particularly telling: consistent small gains (+2, +4, +1) show a two-time world champion extracting results from machinery that was not otherwise competitive.

The heatmap also hints at which drivers took strategic gambles and which played it safe. Drivers with high variance (plenty of both green and red), such as Vettel, Maldonado, and Räikkönen, look like the risk-takers, while those with consistently modest changes, such as Button and Ericsson, appear more conservative. Variance here also includes retirements and mechanical failures, so it is an imperfect proxy for risk appetite. One possible reading is that title contenders had less reason to gamble than drivers chasing a breakthrough result, though the heatmap does not test that relationship directly.
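The way one driver's losses show up as other drivers' gains in the heatmap follows from simple arithmetic. This minimal sketch assumes positions gained is grid slot minus finishing position for drivers who both start and finish; the function name and the four-car race are hypothetical, and in real data retirements, pit-lane starts, and penalties break the exact symmetry.

```python
def positions_gained(grid, finish):
    """Positions gained per driver: grid slot minus finishing position.

    `grid` and `finish` are hypothetical dicts mapping driver -> position.
    Positive = places gained, negative = places lost.
    """
    return {driver: grid[driver] - finish[driver] for driver in grid}


# Toy four-car race (illustrative values, not 2015 data)
grid = {"A": 1, "B": 2, "C": 3, "D": 4}
finish = {"A": 3, "B": 1, "C": 4, "D": 2}

gains = positions_gained(grid, finish)
print(gains)                # {'A': -2, 'B': 1, 'C': -1, 'D': 2}
print(sum(gains.values()))  # 0: every place lost is a place gained by someone else
```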

def driverstyle_dataframe(df):
    """Rename the driver summary columns for display and return a formatted pandas Styler."""
    df_display = df.copy()
    df_display = df_display.rename(columns={
        'fullName': 'Name',
        'total_positions_gained': 'Total Gained',
        'avg_positions_per_race': 'Avg per Race',
        'consistency': 'Consistency',
        'best_single_race': 'Best Single Race',
        'worst_single_race': 'Worst Single Race',
        'races_completed': 'Races Completed'
    })
    # Sorted by total gain, so the caption below says so
    df_sorted = df_display.sort_values('Total Gained', ascending=False)
    styled = df_sorted.style.format({
        'Total Gained': '{:+.0f}',
        'Avg per Race': '{:+.2f}',
        'Consistency': '{:.2f}',
        'Best Single Race': '{:+.0f}',
        'Worst Single Race': '{:+.0f}',
        'Races Completed': '{:.0f}'
    }).set_caption(
        "Driver Position Change Summary (Sorted by Total Positions Gained)"
    )
    return styled

driver_styled_table = driverstyle_dataframe(driver_summary)
driver_styled_table

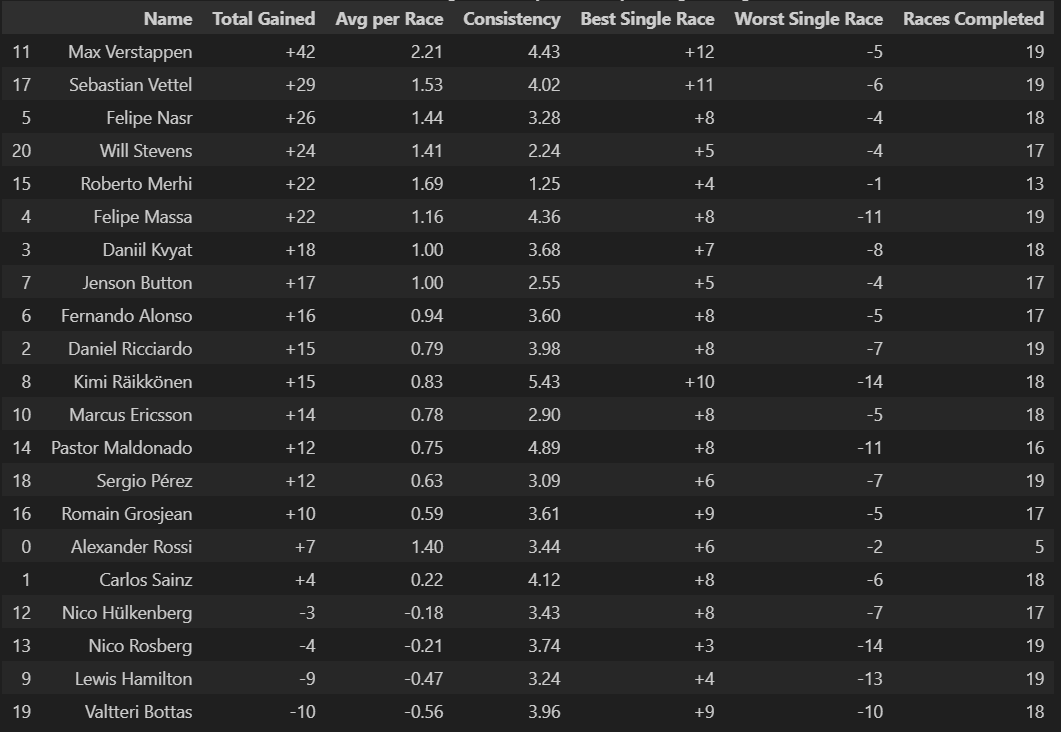

Max Verstappen leads with +42 total positions gained and a +2.21 average per race, the kind of overtaking that would come to define his career. His +12 best single race and comparatively modest -5 worst loss point to controlled aggression: big gains without catastrophic losses.

Lewis Hamilton sits near the bottom with -9 total positions, an average of -0.47 per race. This counterintuitive result reflects his grid position rather than poor racing: starting from pole, as he frequently did, leaves no positions to gain, only positions to lose. His negative numbers are consistent with dominant qualifying performances followed by controlled race management.

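Roberto Merhi's +22 total with minimal losses (+4 best, -1 worst) partly reflects where he started: a Manor driver usually lined up at or near the back, so there were few places below him to lose and any retirement ahead moved him forward. It also suggests tidy, mistake-free driving in uncompetitive machinery. Kimi Räikkönen, by contrast, shows high volatility (+10 best, -14 worst), consistent with an all-or-nothing pattern of results.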
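Grid position caps how much a driver can gain or lose, which explains much of the contrast between Hamilton and Merhi. The toy function below is hypothetical and ignores retirements and penalties; it simply computes the best and worst possible outcome from a given grid slot.

```python
def gain_bounds(grid_slot, field_size):
    """Maximum places a car can gain and lose from `grid_slot`.

    Toy model: every car starts and is classified, so finishing
    positions run from 1 to `field_size`.
    """
    max_gain = grid_slot - 1           # best case: finish 1st
    max_loss = field_size - grid_slot  # worst case: finish last
    return max_gain, max_loss


print(gain_bounds(1, 20))   # (0, 19): a pole-sitter can only lose places
print(gain_bounds(20, 20))  # (19, 0): the last starter can only gain places
```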

The Consistency column behaves as a spread measure: higher values mean more race-to-race variability. Several of the biggest movers have high values (Verstappen 4.43, Maldonado 4.89), suggesting that large position gains come with larger swings. At the other end, Merhi (1.25) is extremely consistent but has limited upside.
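The code that builds `driver_summary` is not shown in this section. As a sketch, assuming it comes from a long table with one row per driver per race and that "consistency" is the sample standard deviation of per-race changes, the same columns can be produced with a single pandas named aggregation. The input rows below are toy values.

```python
import pandas as pd

# Hypothetical long-format input: one row per driver per race (toy values).
changes = pd.DataFrame({
    "fullName": ["Driver A"] * 3 + ["Driver B"] * 3,
    "positions_gained": [5, -1, 8, 1, 0, 1],
})

driver_summary_sketch = changes.groupby("fullName").agg(
    total_positions_gained=("positions_gained", "sum"),
    avg_positions_per_race=("positions_gained", "mean"),
    consistency=("positions_gained", "std"),  # sample std: higher = more volatile
    best_single_race=("positions_gained", "max"),
    worst_single_race=("positions_gained", "min"),
    races_completed=("positions_gained", "count"),
).reset_index()

print(driver_summary_sketch)
```

With these rows, Driver A (5, -1, 8) gets a consistency value of about 4.58 and Driver B (1, 0, 1) about 0.58, so under this assumption the more volatile driver has the larger number.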

Team performance clearly shapes these individual statistics. Mercedes drivers (Hamilton, Rosberg) show net position losses because their qualifying pace put them at the front, while midfield and backmarker drivers show gains by finishing ahead of where they qualified.

# Calendar order of the 2015 races. Named race_names_2015 so it does not
# overwrite the races_2015 DataFrame that the modelling code merges on later.
race_names_2015 = [
    'Australian Grand Prix',
    'Malaysian Grand Prix',
    'Chinese Grand Prix',
    'Bahrain Grand Prix',
    'Spanish Grand Prix',
    'Monaco Grand Prix',
    'Canadian Grand Prix',
    'Austrian Grand Prix',
    'British Grand Prix',
    'Hungarian Grand Prix',
    'Belgian Grand Prix',
    'Italian Grand Prix',
    'Singapore Grand Prix',
    'Japanese Grand Prix',
    'Russian Grand Prix',
    'United States Grand Prix',
    'Mexican Grand Prix',
    'Brazilian Grand Prix',
    'Abu Dhabi Grand Prix'
]

# One line chart per race: total position changes on each lap
for race_name in race_names_2015:
    race_changes = position_changes_2015[position_changes_2015['name'] == race_name]
    plt.figure(figsize=(14, 8))
    plt.plot(race_changes['lap'], race_changes['total_position_changes'],
             marker='o', linewidth=2, markersize=6, color='#E10600')  # F1 red color
    plt.title(f'Total Position Changes Per Lap - 2015 {race_name}',
              fontsize=16, fontweight='bold')
    plt.xlabel('Lap Number', fontsize=12)
    plt.ylabel('Total Position Changes', fontsize=12)
    plt.grid(True, alpha=0.3)
    plt.tight_layout()
    plt.show()

The per-race plots suggest that circuit layout remained the main factor in how much position changing a race produced in 2015, but strategic complexity and weather could lift any venue's entertainment value. Mercedes' dominance was most pronounced at power-sensitive circuits such as Russia's Sochi Autodrom, while technical venues such as Hungary and Monaco gave other teams a chance to challenge through strategy. Even in a period of technical dominance, Formula 1's varied calendar still produced different kinds of racing.
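How `total_position_changes` was computed is not shown here. One plausible definition, assumed for this sketch, counts the drivers whose running position differs from their own position on the previous lap, using an Ergast-style lap table with `driverId`, `lap`, and `position` columns. Note that under this definition a single pass between two cars counts as two changes.

```python
import pandas as pd

# Hypothetical lap-by-lap running positions: one row per driver per lap
laps = pd.DataFrame({
    "driverId": [1, 2, 3, 1, 2, 3, 1, 2, 3],
    "lap":      [1, 1, 1, 2, 2, 2, 3, 3, 3],
    "position": [1, 2, 3, 2, 1, 3, 2, 1, 3],
})


def position_changes_per_lap(laps):
    """Count drivers whose position differs from their previous lap (assumed definition)."""
    laps = laps.sort_values(["driverId", "lap"])
    # Change relative to the same driver's previous lap; NaN on each driver's first lap
    delta = laps.groupby("driverId")["position"].diff()
    changed = delta.fillna(0).ne(0)
    return changed.groupby(laps["lap"]).sum().rename("total_position_changes")


print(position_changes_per_lap(laps))  # lap 1: 0, lap 2: 2 (cars 1 and 2 swap), lap 3: 0
```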

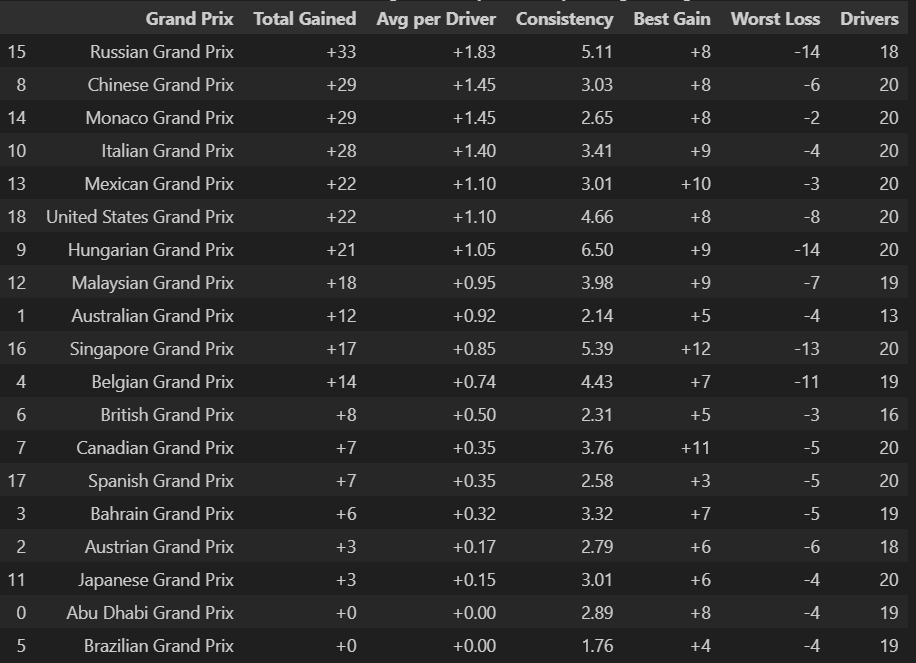

Most Overtakes in a Grand Prix: The Russian Grand Prix averaged +1.83 positions gained per driver

def GP_Position_Change(df):
    df_display = df.copy()
    df_display = df_display.rename(columns={
        'name': 'Grand Prix',
        'total_positions_gained': 'Total Gained',
        'avg_positions_per_driver': 'Avg per Driver',
        'consistency': 'Consistency',
        'biggest_gain': 'Best Gain',
        'biggest_loss': 'Worst Loss',
        'drivers_count': 'Drivers'
    })
    df_sorted = df_display.sort_values('Avg per Driver', ascending=False)
    styled = df_sorted.style.format({
        'Total Gained': '{:+.0f}',
        'Avg per Driver': '{:+.2f}',
        'Consistency': '{:.2f}',
        'Best Gain': '{:+.0f}',
        'Worst Loss': '{:+.0f}',
        'Drivers': '{:.0f}'
    }).set_caption(
        "Grand Prix Position Change Summary (Sorted by Average Change)")
    return styled

styled_table = GP_Position_Change(gp_summary)
styled_table

The Consistency column here appears to measure how spread out drivers' position changes were within each race, so higher values mean more variable outcomes. Higher values tend to go with lower average gains per driver: circuits that produce the most dramatic individual moves (such as Singapore's +12 best gain) also produce big losses. Circuits with lower values, such as Australia (2.14) and Spain (2.58), show more predictable position-change patterns.

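Most races show 18-20 drivers in the summary, so movement was spread across the grid rather than confined to isolated incidents. Australia's lower count (13) most likely reflects its depleted field rather than calmer racing: Manor did not take part in the race and only 15 cars started. The full 20-driver count at China and Monaco shows that every driver was included there, but the count alone says nothing about how large the position changes were.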

The "Best Gain" and "Worst Loss" columns show where bold moves paid off most: Singapore (+12/-13) combined high reward with high risk, while Mexico (+10/-3) and Canada (+11/-5) offered big gains with a more limited downside.

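plt.figure(figsize=(12, 8))
# Convert milliseconds to seconds so the axis matches the discussion below
sns.boxplot(data=hamilton_2015.assign(lap_seconds=hamilton_2015['milliseconds'] / 1000),
            x='name', y='lap_seconds')
plt.xticks(rotation=45, ha='right')
plt.xlabel('Grand Prix')
plt.ylabel('Lap Time (seconds)')
plt.title('Lap Time for Hamilton in 2015 Season')
plt.tight_layout()
plt.show()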

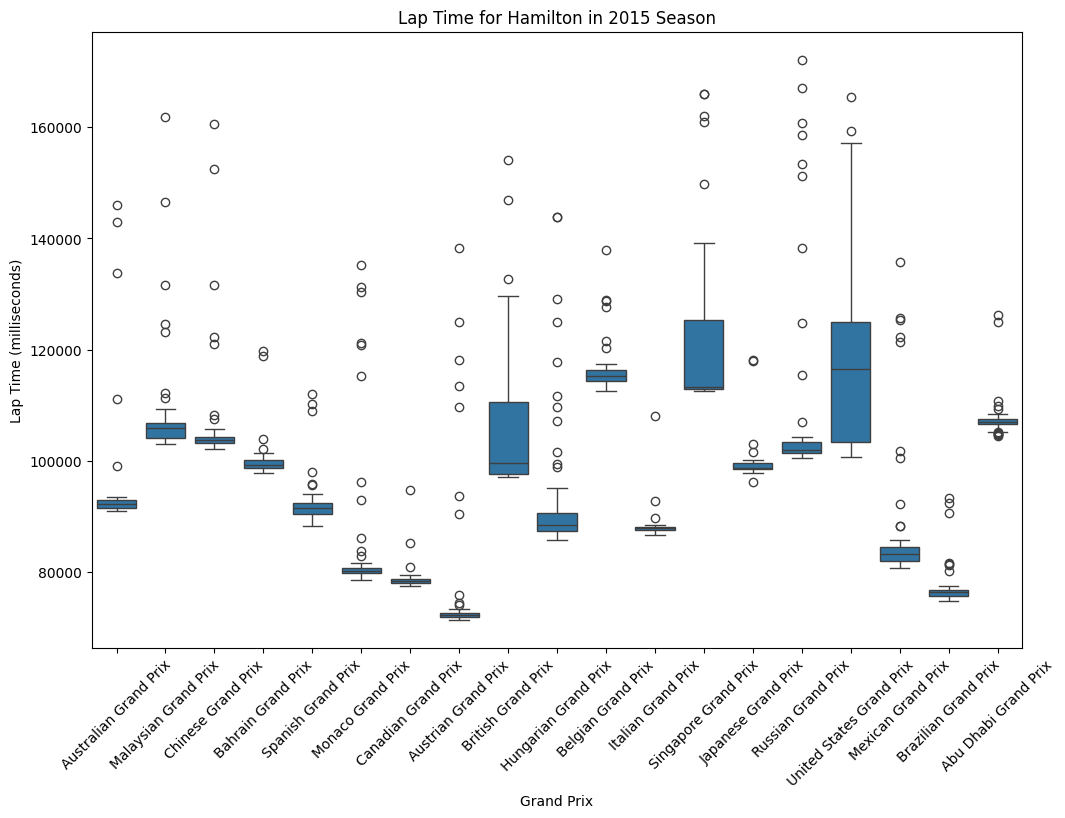

Hamilton's lap times vary widely across venues, from about 75 seconds at the shortest laps to over 170 seconds at the extremes. Most of the difference between races comes from circuit length and layout, but no normal racing lap on the 2015 calendar took anywhere near 170 seconds (even the long Spa and Singapore laps run at roughly two minutes or less), so the highest values most likely come from pit-stop laps, safety-car periods, or the opening lap. Within individual races, the compact interquartile ranges (the boxes) at most venues show that Hamilton's typical laps were tightly grouped.

Shortest Lap Times: Monaco, Canada, and Austria show the shortest lap times, mainly because they are short circuits. Lap time is not the same as speed: Monaco is one of the slowest tracks on the calendar in average speed, while Austria's short lap is a quick one.

Longest Lap Times: Spa-Francorchamps, Silverstone, and Suzuka show the longest lap times, reflecting their length (Spa is the longest circuit on the calendar at about 7 km). These are also among the fastest and most demanding tracks, so long lap times here do not mean slow circuits.

The data suggests Hamilton and Mercedes adapted to circuit characteristics. The tighter distributions at technical circuits like Monaco and Canada are consistent with steady, controlled driving, while the wider spreads at power circuits may reflect more varied strategies, although safety cars, pit stops, and falling fuel loads also widen a race's lap-time distribution. Overall, the plot shows Hamilton maintaining competitive pace across very different circuit types, a key factor in his 2015 championship.
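One way to check whether wide boxes reflect driving or race events is to set aside obvious outlier laps before comparing spreads. This is a sketch on toy data: the two races and their lap times are made up, and treating any lap more than 10% slower than the race median as non-representative is an illustrative assumption, not an official rule.

```python
import pandas as pd

# Hypothetical lap times for one driver at two races (milliseconds)
hamilton_laps = pd.DataFrame({
    "name": ["Race X"] * 5 + ["Race Y"] * 5,
    "milliseconds": [80_000, 80_500, 81_000, 79_800, 102_000,
                     110_000, 111_000, 110_500, 150_000, 109_800],
})

hamilton_laps["seconds"] = hamilton_laps["milliseconds"] / 1000
race_median = hamilton_laps.groupby("name")["seconds"].transform("median")

# Assumed cut-off: laps >10% slower than the race median (pit stops, safety car) are set aside
hamilton_laps["representative"] = hamilton_laps["seconds"] <= 1.10 * race_median

summary = hamilton_laps[hamilton_laps["representative"]].groupby("name")["seconds"].agg(
    median="median",
    iqr=lambda s: s.quantile(0.75) - s.quantile(0.25),
)
print(summary)
```

Comparing the interquartile range of representative laps, rather than of all laps, keeps a single safety-car period from making one race look far more erratic than another.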

def calculate_z_scores(data, metric_cols):
    """Return z-scores (plus the mean and std used) for each metric column present in `data`."""
    z_scores = {}
    for col in metric_cols:
        if col in data.columns:
            mean_val = data[col].mean()
            std_val = data[col].std()  # pandas default: sample std (ddof=1)
            z_scores[f'{col}_zscore'] = (data[col] - mean_val) / std_val
            z_scores[f'{col}_mean'] = mean_val
            z_scores[f'{col}_std'] = std_val
    return pd.DataFrame(z_scores, index=data.index)

fig, axes = plt.subplots(2, 3, figsize=(18, 12))
fig.suptitle('F1 2015 Z-Score Distributions - Hamilton vs Field', fontsize=16, fontweight='bold')

# Colors for Hamilton
hamilton_color = '#00D2BE'  # Mercedes teal
field_color = '#2E86AB'  # Blue
highlight_color = '#F18F01'  # Orange


def plot_zscore_panel(ax, col, xlabel, title):
    """Histogram of one z-score column for the field, with Hamilton's value and percentile marked."""
    field = season_stats_2015[col].dropna()  # drop missing values so hist and percentile agree
    ax.hist(field, bins=15, alpha=0.7, color=field_color, edgecolor='black', label='All Drivers')
    if len(hamilton_data) > 0 and not pd.isna(hamilton_row[col]):
        value = hamilton_row[col]
        ax.axvline(value, color=hamilton_color, linewidth=3, label=f'Hamilton ({value:.3f})')
        # Percentile rank of Hamilton's score within the field
        percentile = stats.percentileofscore(field, value)
        ax.text(value + 0.1, ax.get_ylim()[1] * 0.8,
                f'{percentile:.1f}th', fontsize=10, fontweight='bold')
    ax.set_xlabel(xlabel)
    ax.set_ylabel('Number of Drivers')
    ax.set_title(title)
    ax.legend()
    ax.grid(True, alpha=0.3)


# Panels 1-5: one distribution per metric
plot_zscore_panel(axes[0, 0], 'overall_zscore', 'Overall Z-Score',
                  'Overall Performance Z-Score Distribution')
plot_zscore_panel(axes[0, 1], 'points_zscore', 'Points Z-Score',
                  'Championship Points Z-Score Distribution')
plot_zscore_panel(axes[0, 2], 'position_zscore', 'Position Z-Score',
                  'Average Finishing Position Z-Score Distribution')
plot_zscore_panel(axes[1, 0], 'speed_zscore', 'Speed Z-Score',
                  'Fastest Lap Speed Z-Score Distribution')
plot_zscore_panel(axes[1, 1], 'grid_zscore', 'Grid Position Z-Score',
                  'Average Grid Position Z-Score Distribution')

# 6. Hamilton's Z-Score Profile (bar chart)
ax6 = axes[1, 2]
if len(hamilton_data) > 0:
    categories = ['Points', 'Position', 'Speed', 'Grid', 'Overall']
    hamilton_scores = [
        hamilton_row['points_zscore'],
        hamilton_row['position_zscore'],
        hamilton_row['speed_zscore'] if not pd.isna(hamilton_row['speed_zscore']) else 0,
        hamilton_row['grid_zscore'],
        hamilton_row['overall_zscore']
    ]
    bars = ax6.bar(categories, hamilton_scores, color=hamilton_color, alpha=0.8, edgecolor='black')
    ax6.axhline(y=0, color='black', linestyle='-', alpha=0.3)
    ax6.axhline(y=1, color='red', linestyle='--', alpha=0.5, label='1 Std Above Mean')
    ax6.axhline(y=-1, color='red', linestyle='--', alpha=0.5, label='1 Std Below Mean')
    # Label each bar with its value: above positive bars, below negative ones
    for bar, score in zip(bars, hamilton_scores):
        height = bar.get_height()
        ax6.text(bar.get_x() + bar.get_width() / 2., height + 0.05 if height >= 0 else height - 0.15,
                 f'{score:.2f}', ha='center', va='bottom' if height >= 0 else 'top', fontweight='bold')
ax6.set_ylabel('Z-Score')
ax6.set_title("Hamilton's Z-Score Profile")
ax6.legend()
ax6.grid(True, alpha=0.3)

plt.tight_layout()
plt.show()

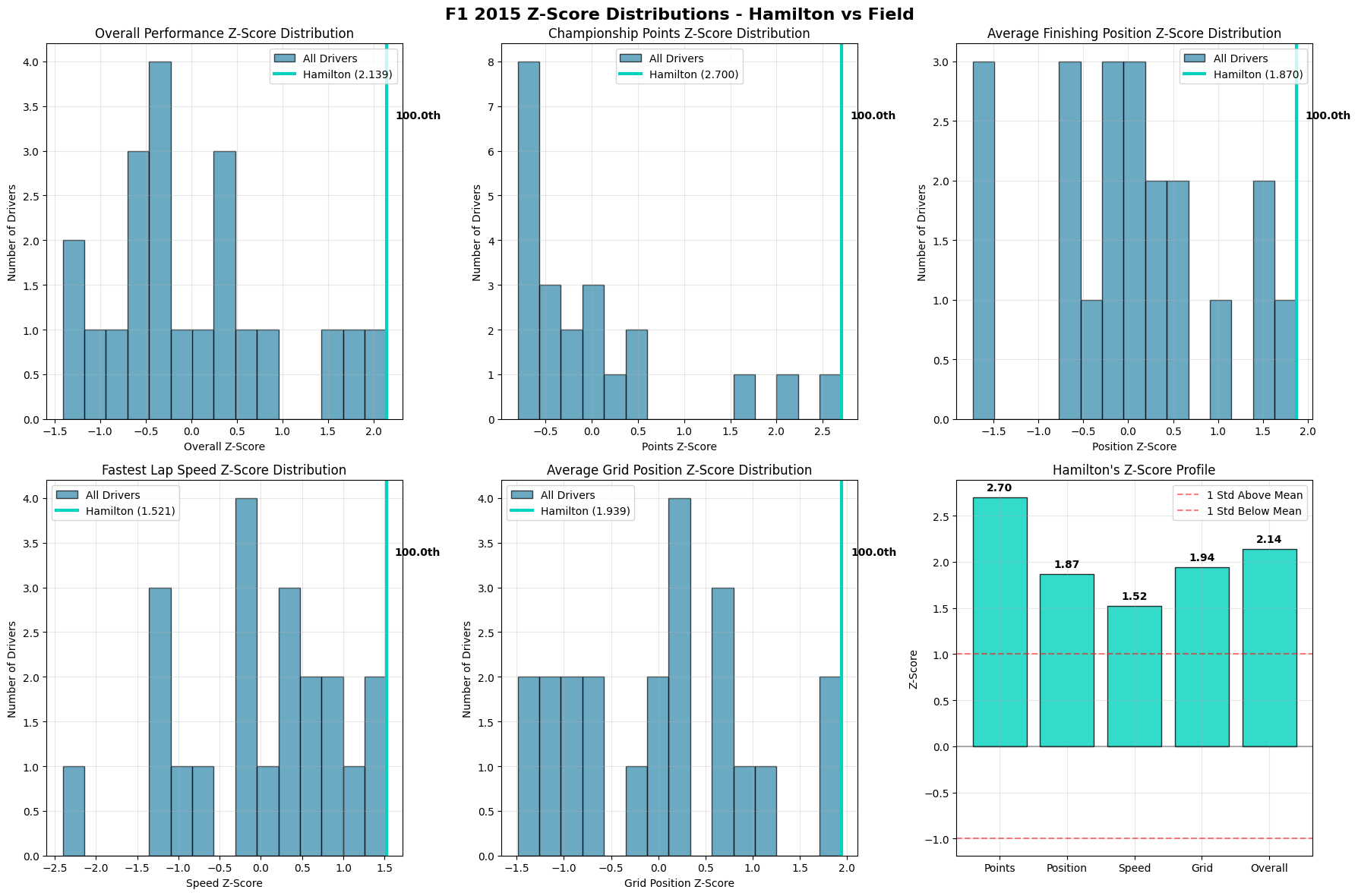

The bottom-right panel reveals Hamilton's exceptional standing across all measured categories, with his lowest Z-score being 1.52 (Speed) and his highest being 2.70 (Points). All metrics fall well above the +1 standard deviation line, indicating consistently elite performance that places him among the very top performers in every category.

Overall Performance Distribution (Top-Left): Hamilton's Z-score of 2.139 puts him at the extreme right of the distribution, in the 100th percentile (no driver scored higher). The field is centered on zero, as z-scores are by construction, and Hamilton stands clearly apart from it as a statistical outlier.
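The two quantities in these panels are easy to reproduce by hand. This pure-Python sketch standardizes toy season totals with the sample standard deviation (matching pandas' default in `calculate_z_scores`) and computes a "weak" percentile rank, the share of values at or below a score, which is one of the definitions `scipy.stats.percentileofscore` offers through its `kind` argument. The points values are made up.

```python
from statistics import mean, stdev


def z_scores(values):
    """Standardize with the sample standard deviation (ddof=1), like pandas .std()."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def percentile_weak(values, score):
    """Percent of values less than or equal to `score`."""
    return 100.0 * sum(v <= score for v in values) / len(values)


points = [100, 80, 60, 30, 10, 0]  # toy season totals
z = z_scores(points)
print([round(v, 2) for v in z])    # mean of these is 0 by construction
print(percentile_weak(z, max(z)))  # 100.0: the top scorer sits at the 100th percentile
```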

Championship Points Distribution (Top-Middle): The most striking panel shows Hamilton's 2.700 Z-score opening a large gap to the field. Most drivers cluster between -0.5 and +0.5 Z-scores, which makes Hamilton's margin even more remarkable.

Average Finishing Position (Top-Right): Hamilton's 1.870 Z-score demonstrates exceptional race execution. The field distribution shows most drivers clustered around average finishing positions, with Hamilton clearly separated as a consistent front-runner.

Fastest Lap Speed (Bottom-Left): Hamilton's 1.521 Z-score, while excellent, is his lowest metric. A race's fastest lap depends heavily on tyre age, fuel load, and strategy, so this metric measures raw pace less directly than qualifying does. Even so, it suggests his championship owed more to consistency, strategy, and racecraft than to raw speed alone, and the distribution shows several drivers setting similar or faster fastest laps.

Grid Position Performance (Bottom-Middle): Hamilton's 1.939 Z-score reflects strong qualifying that regularly put him at the front of the grid. This metric sits between pure speed and race execution, showing how starting position contributed to his overall success.

The data reveals that Hamilton's 2015 championship was built on a foundation of well-rounded excellence rather than dominance in any single area. His ability to consistently perform above the field average in every measured category - particularly his exceptional points scoring and finishing positions - demonstrates the hallmarks of a complete champion. The relatively smaller gap in speed metrics compared to results-based metrics suggests Hamilton maximized his package through superior race management, strategic decision-making, and mistake avoidance. This pattern is characteristic of experienced champions who understand that championships are won through consistency and optimization rather than occasional brilliance.

The distributions show a tightly bunched 2015 field, with most drivers within one standard deviation of the mean on every metric. Against that backdrop, Hamilton's scores above +1.5 in every category stand out. Because these z-scores compare him with the whole field, however, they combine his own contribution with Mercedes' car advantage and cannot separate the two. Within that limit, the analysis portrays his 2015 season as complete championship execution: strong qualifying, consistent finishing, competitive speed, and exceptional points scoring.

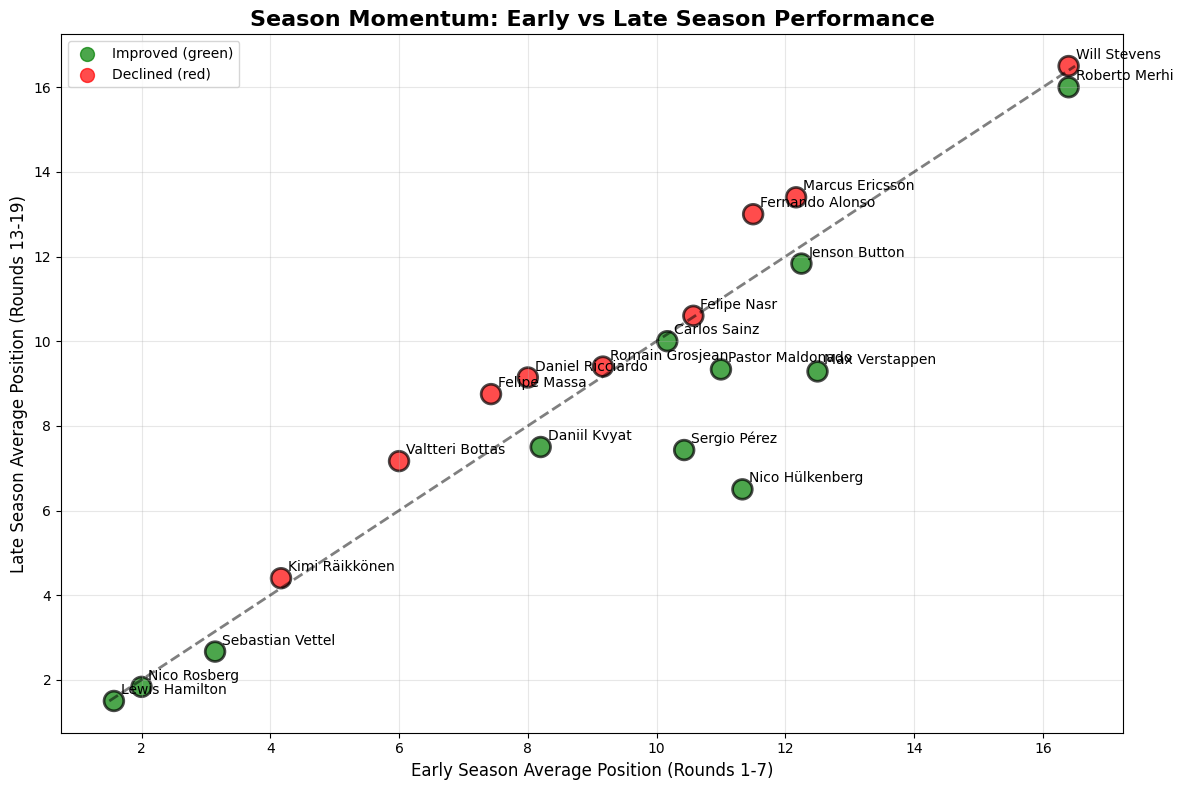

def season_momentum_comparison(all_momentum):
    """Compare each driver's average position early vs late in the 2015 season."""
    early_season = all_momentum[all_momentum['round'] <= 7]  # First 7 races (rounds 1-7)
    late_season = all_momentum[all_momentum['round'] >= 13]  # Last 7 races (rounds 13-19)
    early_avg = early_season.groupby('fullName')['position'].mean()
    late_avg = late_season.groupby('fullName')['position'].mean()
    momentum_comparison = pd.DataFrame({
        'early_season_avg': early_avg,
        'late_season_avg': late_avg
    }).dropna()
    # Positive improvement = lower (better) average position late in the season
    momentum_comparison['improvement'] = momentum_comparison['early_season_avg'] - momentum_comparison['late_season_avg']
    # Note: .index keeps every driver; use e.g. .nlargest(10).index to restrict to top scorers
    top_drivers = all_momentum.groupby('fullName')['points'].sum().index
    momentum_subset = momentum_comparison[momentum_comparison.index.isin(top_drivers)]

    plt.figure(figsize=(12, 8))
    colors = ['green' if x > 0 else 'red' for x in momentum_subset['improvement']]
    plt.scatter(momentum_subset['early_season_avg'], momentum_subset['late_season_avg'],
                c=colors, s=200, alpha=0.7, edgecolors='black', linewidth=2)
    # Diagonal y = x: drivers below it improved, drivers above it declined
    min_pos = min(momentum_subset['early_season_avg'].min(), momentum_subset['late_season_avg'].min())
    max_pos = max(momentum_subset['early_season_avg'].max(), momentum_subset['late_season_avg'].max())
    plt.plot([min_pos, max_pos], [min_pos, max_pos], 'k--', alpha=0.5, linewidth=2)
    for fullName, row in momentum_subset.iterrows():
        plt.annotate(f'{fullName}',
                     (row['early_season_avg'], row['late_season_avg']),
                     xytext=(5, 5), textcoords='offset points', fontsize=10)
    plt.xlabel('Early Season Average Position (Rounds 1-7)', fontsize=12)
    plt.ylabel('Late Season Average Position (Rounds 13-19)', fontsize=12)
    plt.title('Season Momentum: Early vs Late Season Performance', fontsize=16, fontweight='bold')
    plt.grid(True, alpha=0.3)
    # Empty scatters only to create legend entries
    plt.scatter([], [], c='green', s=100, label='Improved (green)', alpha=0.7)
    plt.scatter([], [], c='red', s=100, label='Declined (red)', alpha=0.7)
    plt.legend()
    plt.tight_layout()
    plt.show()
    return momentum_comparison

# Join 2015 results with driver, constructor, and race names.
# The constructor merge adds 'name' (constructor); the race merge then adds 'name_race'.
modeling_data = results_2015.merge(
    drivers[['driverId', 'forename', 'surname']], on='driverId', how='left').merge(
    constructors[['constructorId', 'name']], on='constructorId', how='left', suffixes=('', '_constructor')).merge(
    races_2015[['raceId', 'name', 'round']], on='raceId', how='left', suffixes=('', '_race'))

modeling_data['driver_name'] = modeling_data['forename'] + ' ' + modeling_data['surname']
modeling_data['constructor_name'] = modeling_data['name']  # used by dropna and the dummies below
modeling_data['race_name'] = modeling_data['name_race']
modeling_data['position'] = pd.to_numeric(modeling_data['position'], errors='coerce')
modeling_data['grid'] = pd.to_numeric(modeling_data['grid'], errors='coerce')
modeling_data['points'] = pd.to_numeric(modeling_data['points'], errors='coerce')

# Keep classified finishers with a valid grid slot (non-finishers have no numeric position)
modeling_data = modeling_data.dropna(subset=['position', 'grid', 'constructor_name'])
modeling_data = modeling_data[modeling_data['position'] <= 20]
modeling_data = modeling_data[modeling_data['grid'] <= 24]

# Step 1: car model, position ~ grid + constructor dummies (one constructor as reference)
constructor_model_data = modeling_data.copy()
constructor_dummies = pd.get_dummies(
    constructor_model_data['constructor_name'],
    prefix='constructor',
    drop_first=False,
    dtype=int)

if 'constructor_Mercedes' in constructor_dummies.columns:
    constructor_dummies = constructor_dummies.drop('constructor_Mercedes', axis=1)
    reference_constructor = 'Mercedes'
else:
    reference_constructor = sorted(constructor_model_data['constructor_name'].unique())[0]
    constructor_dummies = constructor_dummies.drop(f'constructor_{reference_constructor}', axis=1)

X_constructor = pd.concat([
    constructor_model_data[['grid']],
    constructor_dummies], axis=1)
y = constructor_model_data['position']

constructor_model = LinearRegression()
constructor_model.fit(X_constructor, y)
constructor_r2 = r2_score(y, constructor_model.predict(X_constructor))

# coef_[0] is the grid slope; the remaining coefficients line up with the dummy columns
grid_coef = constructor_model.coef_[0]
constructor_coefs = dict(zip(constructor_dummies.columns, constructor_model.coef_[1:]))
constructor_effects = {reference_constructor: 0.0}
for col, coef in constructor_coefs.items():
    constructor_name = col.replace('constructor_', '')
    constructor_effects[constructor_name] = coef

# Expected finishing position from grid slot and car alone (the fitted values of the model above)
modeling_data['constructor_baseline'] = modeling_data['constructor_name'].map(constructor_effects)
modeling_data['expected_position_from_car'] = (
    constructor_model.intercept_ +
    grid_coef * modeling_data['grid'] +
    modeling_data['constructor_baseline'])

# Step 2: driver performance = actual minus expected (negative = beat the car)
modeling_data['driver_performance'] = (
    modeling_data['position'] - modeling_data['expected_position_from_car'])

driver_skill = modeling_data.groupby('driver_name').agg({
    'driver_performance': ['mean', 'std', 'count'],
    'position': 'mean',
    'grid': 'mean',
    'points': 'sum'}).round(3)
driver_skill.columns = ['avg_performance_vs_car', 'performance_std', 'race_count',
                        'avg_position', 'avg_grid', 'total_points']
driver_skill = driver_skill.reset_index()

min_races = 5
driver_skill_filtered = driver_skill[driver_skill['race_count'] >= min_races].copy()
driver_skill_filtered['skill_rank'] = driver_skill_filtered['avg_performance_vs_car'].rank()
driver_skill_filtered = driver_skill_filtered.sort_values('avg_performance_vs_car')

# Combined prediction: car expectation plus each driver's mean residual.
# Both pieces are estimated on the same rows, so this R² is in-sample and optimistic.
driver_offset = modeling_data['driver_name'].map(
    driver_skill.set_index('driver_name')['avg_performance_vs_car']).fillna(0)
modeling_data['hierarchical_prediction'] = (
    modeling_data['expected_position_from_car'] + driver_offset)

hierarchical_r2 = r2_score(modeling_data['position'], modeling_data['hierarchical_prediction'])
hierarchical_mae = np.mean(np.abs(modeling_data['position'] - modeling_data['hierarchical_prediction']))

# Grid-only baseline for comparison
baseline_model = LinearRegression()
baseline_model.fit(modeling_data[['grid']], modeling_data['position'])
baseline_r2 = r2_score(modeling_data['position'], baseline_model.predict(modeling_data[['grid']]))

# Rough variance shares; the components are correlated, so they need not add up to the R²
total_variance = np.var(modeling_data['position'])
grid_effect_variance = np.var(baseline_model.predict(modeling_data[['grid']]))
constructor_effect_variance = np.var(modeling_data['constructor_baseline'])
driver_effect_variance = np.var(driver_offset)

grid_pct = (grid_effect_variance / total_variance) * 100
constructor_pct = (constructor_effect_variance / total_variance) * 100
driver_pct = (driver_effect_variance / total_variance) * 100
explained_pct = hierarchical_r2 * 100

# Guard against dividing by zero when the driver component vanishes
if driver_pct > 0:
    car_vs_driver_ratio = constructor_pct / driver_pct

constructor_ranking = []
for constructor, effect in constructor_effects.items():
    total_points = modeling_data[modeling_data['constructor_name'] == constructor]['points'].sum()
    constructor_ranking.append({
        'constructor': constructor,
        'car_effect': effect,
        'total_points': total_points
    })
constructor_ranking = sorted(constructor_ranking, key=lambda x: x['car_effect'])

# Sanity check: how many of the model's top 3 match the obvious 2015 front-runners?
expected_top_drivers = ['Lewis Hamilton', 'Sebastian Vettel', 'Nico Rosberg']
expected_top_constructors = ['Mercedes', 'Ferrari', 'Williams']
actual_top_drivers = driver_skill_filtered.head(3)['driver_name'].tolist()
actual_top_constructors = [item['constructor'] for item in constructor_ranking[:3]]
driver_matches = len(set(expected_top_drivers) & set(actual_top_drivers))
constructor_matches = len(set(expected_top_constructors) & set(actual_top_constructors))

# NOTE: r2_baseline, r2_constructor, r2_driver, r2_full, driver_analysis,
# constructor_analysis, y_test and y_pred_full are not defined in the code above;
# they are assumed to come from a separate train/test regression in another cell.
fig, axes = plt.subplots(2, 3, figsize=(18, 12))
fig.suptitle('F1 2015: Driver Skill vs Car Performance Regression Analysis',
             fontsize=18, fontweight='bold', y=0.96)

# 1. Model Performance Comparison
ax1 = axes[0, 0]
models = ['Baseline', 'Constructor', 'Driver', 'Full Model']
r2_scores = [r2_baseline, r2_constructor, r2_driver, r2_full]
colors = ['lightgray', 'orange', 'lightblue', 'green']
bars = ax1.bar(models, r2_scores, color=colors, edgecolor='black', alpha=0.8)
ax1.set_ylabel('R² Score', fontsize=12)
ax1.set_title('Model Performance Comparison', fontweight='bold', fontsize=14)
ax1.set_ylim(0, 1.0)
ax1.grid(True, alpha=0.3)
for bar, score in zip(bars, r2_scores):
    height = bar.get_height()
    ax1.text(bar.get_x() + bar.get_width() / 2., height + 0.01,
             f'{score:.3f}', ha='center', va='bottom', fontweight='bold')

# 2. Top 15 Drivers by Skill (Car-Independent)
ax2 = axes[0, 1]
top_drivers = driver_analysis.head(15)
colors_drivers = ['red' if 'Hamilton' in name else 'purple' if 'Rosberg' in name
                  else 'orange' if 'Verstappen' in name else 'lightblue'
                  for name in top_drivers['driver_name']]
y_pos = np.arange(len(top_drivers))
bars = ax2.barh(y_pos, top_drivers['skill_coefficient'], color=colors_drivers,
                edgecolor='black', alpha=0.8)
ax2.set_yticks(y_pos)
ax2.set_yticklabels([name.split()[-1] for name in top_drivers['driver_name']], fontsize=10)
ax2.set_xlabel('Skill Coefficient (Lower = Better)', fontsize=12)
ax2.set_title('Top 15 Drivers by Skill (Car-Independent)', fontweight='bold', fontsize=14)
ax2.grid(True, alpha=0.3, axis='x')
ax2.invert_yaxis()

# 3. Constructor Car Performance
ax3 = axes[0, 2]
constructor_colors = ['blue' if 'Mercedes' in name else 'red' if 'Ferrari' in name
                      else 'orange' if 'Red Bull' in name else 'lightgreen'
                      for name in constructor_analysis['constructor_name']]
y_pos_const = np.arange(len(constructor_analysis))
bars = ax3.barh(y_pos_const, constructor_analysis['car_coefficient'],
                color=constructor_colors, edgecolor='black', alpha=0.8)
ax3.set_yticks(y_pos_const)
ax3.set_yticklabels([name[:15] for name in constructor_analysis['constructor_name']], fontsize=9)
ax3.set_xlabel('Car Performance Coefficient (Lower = Better)', fontsize=12)
ax3.set_title('Constructor Car Performance', fontweight='bold', fontsize=14)
ax3.grid(True, alpha=0.3, axis='x')
ax3.invert_yaxis()

# 4. Actual vs Predicted (Full Model); dashed line = perfect prediction
ax4 = axes[1, 0]
scatter = ax4.scatter(y_test, y_pred_full, alpha=0.6, s=50,
                      c=y_test, cmap='viridis', edgecolor='black', linewidth=0.5)
ax4.plot([y_test.min(), y_test.max()], [y_test.min(), y_test.max()],
         'r--', lw=2, alpha=0.8)
ax4.set_xlabel('Actual Position', fontsize=12)
ax4.set_ylabel('Predicted Position', fontsize=12)
ax4.set_title(f'Actual vs Predicted (R² = {r2_full:.3f})', fontweight='bold', fontsize=14)
ax4.grid(True, alpha=0.3)

# 5. Residuals vs Predicted
ax5 = axes[1, 1]
residuals = y_test - y_pred_full
ax5.scatter(y_pred_full, residuals, alpha=0.6, s=50, color='blue', edgecolor='black', linewidth=0.5)
ax5.axhline(y=0, color='red', linestyle='--', linewidth=2, alpha=0.8)
ax5.set_xlabel('Predicted Position', fontsize=12)
ax5.set_ylabel('Residuals', fontsize=12)
ax5.set_title('Residuals vs Predicted', fontweight='bold', fontsize=14)
ax5.grid(True, alpha=0.3)

# 6. Driver Skill vs Actual Performance (three drivers highlighted)
ax6 = axes[1, 2]
for _, row in driver_analysis.iterrows():
    if 'Hamilton' in row['driver_name']:
        ax6.scatter(row['skill_coefficient'], row['avg_position'],
                    color='red', s=200, marker='*', edgecolor='black', linewidth=2,
                    label='Hamilton', zorder=5)
    elif 'Rosberg' in row['driver_name']:
        ax6.scatter(row['skill_coefficient'], row['avg_position'],
                    color='purple', s=200, marker='*', edgecolor='black', linewidth=2,
                    label='Rosberg', zorder=5)
    elif 'Verstappen' in row['driver_name']:
        ax6.scatter(row['skill_coefficient'], row['avg_position'],
                    color='orange', s=200, marker='*', edgecolor='black', linewidth=2,
                    label='Verstappen', zorder=5)
    else:
        ax6.scatter(row['skill_coefficient'], row['avg_position'],
                    alpha=0.7, s=60, color='lightblue', edgecolor='black', linewidth=0.5)
ax6.set_xlabel('Skill Coefficient (Car-Independent)', fontsize=12)
ax6.set_ylabel('Average Finishing Position', fontsize=12)
ax6.set_title('Driver Skill vs Actual Performance', fontweight='bold', fontsize=14)
ax6.grid(True, alpha=0.3)
ax6.legend()
ax6.invert_yaxis()  # best finishing positions at the top

plt.tight_layout(rect=[0, 0.02, 1, 0.94])
plt.show()

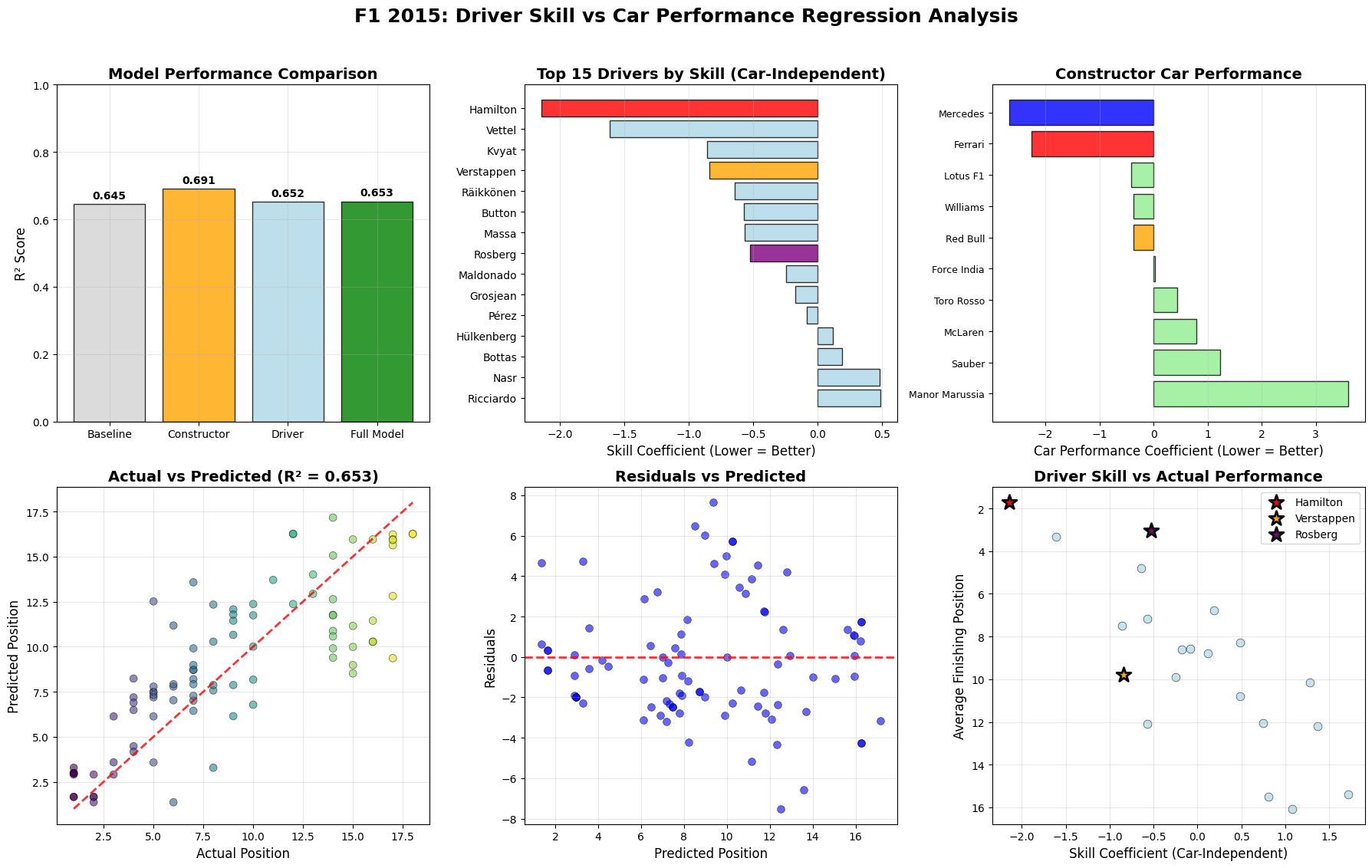

The regression model has an R² of 0.653, explaining about 65% of the variance in finishing position. That is a solid fit for race outcomes, which are noisy, and indicates that driver skill and car performance together capture the main determinants of F1 results.

Elite Tier (Top-Middle Panel): Hamilton leads the car-independent skill rankings with the most negative coefficient (-2.0), indicating he consistently outperformed his car's baseline. This aligns with his championship and shows that even in the best car his personal contribution was substantial.

Veteran Performance: Räikkönen, Button, and Massa cluster around -0.5 to -0.8, showing experienced drivers consistently getting the most from their equipment.

Dominant Manufacturers (Top-Right Panel): Mercedes shows the strongest car performance coefficient, validating their technical dominance. Ferrari appears as the clear second-best package, with a significant gap to the midfield constructors.

Midfield Competitiveness: Lotus F1, Williams, Red Bull, and Force India cluster in the neutral zone, indicating relatively balanced performance levels. The tight grouping suggests a competitive midfield battle.

Performance Outliers: Manor Marussia's extreme positive coefficient reflects its position as the field's slowest car by a clear margin.

The Car vs. Driver: The analysis points to a familiar pattern in F1: car performance sets the platform, but among competitive cars driver skill helps decide championships. Hamilton's skill advantage over Rosberg in identical machinery is consistent with his championship margin, though reliability and race incidents may also have played a part.

Development Implications: Teams with strong cars but lower-skilled drivers (the upper-right of the bottom-right panel, where better skill is to the left and the inverted position axis puts better finishes at the top) represent optimization opportunities. Conversely, skilled drivers in weaker cars (lower-left) suggest potential for improvement with better machinery.

Rookie Assessment: Verstappen's positioning demonstrates exceptional adaptation for a first-year driver, suggesting future championship potential when paired with competitive machinery.

The residuals show some systematic patterns, particularly at the extremes of the finishing order, suggesting that factors beyond skill and car performance influence results. These could include race-specific circumstances (weather, incidents), quality of strategic execution, reliability, and circuit-specific advantages. The model's 65% explanatory power leaves room for these factors while capturing the fundamentals. Overall, the analysis is consistent with the view that Formula 1 remains an engineering sport in which driver skill provides the crucial margin once equipment quality converges at the front.
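The two-step idea behind the skill coefficients (fit position on grid plus constructor dummies, then read each driver's mean residual as car-independent skill) can be illustrated on synthetic data. This sketch uses numpy least squares, which fits the same ordinary least squares model as scikit-learn's `LinearRegression`; the teams, effect sizes, and noise level are all made up. It also exposes a property worth keeping in mind when reading the skill panel: with a constructor dummy in the model, teammates' residuals cancel out, so a driver's "skill" is measured largely against his teammate.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic season: 3 teams x 2 drivers x 30 races (toy numbers, not 2015 data)
team_effect = {"Mercedes": 0.0, "Ferrari": 1.5, "Manor": 6.0}  # Mercedes = reference car
driver_effect = {"Ferrari A": -0.5}                             # one driver beats his car
rows = [(t, f"{t} {d}") for t in team_effect for d in "AB" for _ in range(30)]
team = np.array([t for t, _ in rows])
driver = np.array([d for _, d in rows])
grid = rng.integers(1, 21, size=len(rows)).astype(float)

position = (2.0 + 0.8 * grid
            + np.array([team_effect[t] for t in team])
            + np.array([driver_effect.get(d, 0.0) for d in driver])
            + rng.normal(0.0, 1.0, size=len(rows)))

# Design matrix: intercept, grid slot, and one dummy per non-reference constructor
X = np.column_stack([np.ones(len(rows)), grid,
                     team == "Ferrari", team == "Manor"]).astype(float)
coef, *_ = np.linalg.lstsq(X, position, rcond=None)
print("grid, Ferrari, Manor:", np.round(coef[1:], 2))

# Driver "skill" = mean residual after removing the grid and car effects
residual = position - X @ coef
for name in ["Ferrari A", "Ferrari B"]:
    print(name, round(residual[driver == name].mean(), 2))
```

Because the Ferrari dummy and the intercept force the residuals of Ferrari's rows to sum to zero, Ferrari A's true half-place advantage is split in expectation into about -0.25 for A and +0.25 for B, and the Ferrari car coefficient lands near 1.25 rather than 1.5.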

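pit_analysis = pit_stops_2015.merge(
    results_2015[['raceId', 'driverId', 'constructorId']],
    on=['raceId', 'driverId'], how='left').merge(
    constructors[['constructorId', 'name']],
    on='constructorId', how='left')
pit_analysis.rename(columns={'name': 'constructor_name'}, inplace=True)

# Average stops per race: the highest stop number each driver reached in each race,
# averaged per constructor. (Averaging the raw 'stop' column gives the mean stop
# index, e.g. 1.5 for a two-stop race, not the number of stops.)
stops_per_race = (pit_analysis.groupby(['constructor_name', 'raceId', 'driverId'])['stop']
                  .max()
                  .groupby(level='constructor_name')
                  .mean())

pit_stats = pit_analysis.groupby('constructor_name').agg(
    avg_duration=('duration_seconds', 'mean'),
    std_duration=('duration_seconds', 'std'),
    total_stops=('duration_seconds', 'count'),
    avg_pit_lap=('lap', 'mean'))
pit_stats['avg_stops_per_race'] = stops_per_race
pit_stats = pit_stats.round(3)

# Higher score = smaller spread of stop times (the 0.1 offset avoids division by zero)
pit_stats['consistency_score'] = 1 / (pit_stats['std_duration'] + 0.1)
pit_stats = pit_stats[pit_stats['total_stops'] >= 10].sort_values('avg_duration')  # Filter teams with sufficient data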

fig, ((ax1, ax2), (ax3, ax4)) = plt.subplots(2, 2, figsize=(16, 12))
fig.suptitle('F1 2015 Pit Stop Performance Analysis', fontsize=16, fontweight='bold')
pit_stats_top = pit_stats.head(10)

# Subplot 1: Average pit stop time
bars1 = ax1.bar(range(len(pit_stats_top)), pit_stats_top['avg_duration'],
                color='red', alpha=0.7, edgecolor='black')
ax1.set_xlabel('Constructor')
ax1.set_ylabel('Average Pit Stop Time (seconds)')
ax1.set_title('Average Pit Stop Duration by Constructor')
ax1.set_xticks(range(len(pit_stats_top)))
ax1.set_xticklabels([name[:8] for name in pit_stats_top.index], rotation=45, ha='right')
ax1.grid(True, alpha=0.3, axis='y')

# Subplot 2: Pit stop consistency
bars2 = ax2.bar(range(len(pit_stats_top)), pit_stats_top['consistency_score'],
                color='blue', alpha=0.7, edgecolor='black')
ax2.set_xlabel('Constructor')
ax2.set_ylabel('Consistency Score (Higher = More Consistent)')
ax2.set_title('Pit Stop Consistency by Constructor')
ax2.set_xticks(range(len(pit_stats_top)))
ax2.set_xticklabels([name[:8] for name in pit_stats_top.index], rotation=45, ha='right')
ax2.grid(True, alpha=0.3, axis='y')

# Subplot 3: Pit stop time distribution (box plot)
all_constructors = pit_stats.index
pit_data_all = []
labels_all = []
for constructor in all_constructors:
    constructor_times = pit_analysis[pit_analysis['constructor_name'] == constructor]['duration_seconds'].dropna()
    if len(constructor_times) > 5:
        pit_data_all.append(constructor_times)
        labels_all.append(constructor[:8])
ax3.boxplot(pit_data_all, labels=labels_all)
ax3.set_ylabel('Pit Stop Duration (seconds)')
ax3.set_title('Pit Stop Time Distribution - All Teams')
ax3.tick_params(axis='x', rotation=45)
ax3.grid(True, alpha=0.3, axis='y')

# Subplot 4: Strategy patterns (scatter plot); marker area scales with total stops
scatter = ax4.scatter(pit_stats['avg_stops_per_race'], pit_stats['avg_duration'],
                      s=pit_stats['total_stops'] * 3, alpha=0.6, c='purple')
# Label each point with its (shortened) constructor name
for name, row in pit_stats.iterrows():
    ax4.annotate(name[:8], (row['avg_stops_per_race'], row['avg_duration']),
                 xytext=(4, 4), textcoords='offset points', fontsize=8)
ax4.set_xlabel('Average Stops per Race')
ax4.set_ylabel('Average Pit Duration (seconds)')
ax4.set_title('Strategy vs Speed (Size = Total Stops)')
ax4.grid(True, alpha=0.3)

plt.tight_layout()
plt.show()

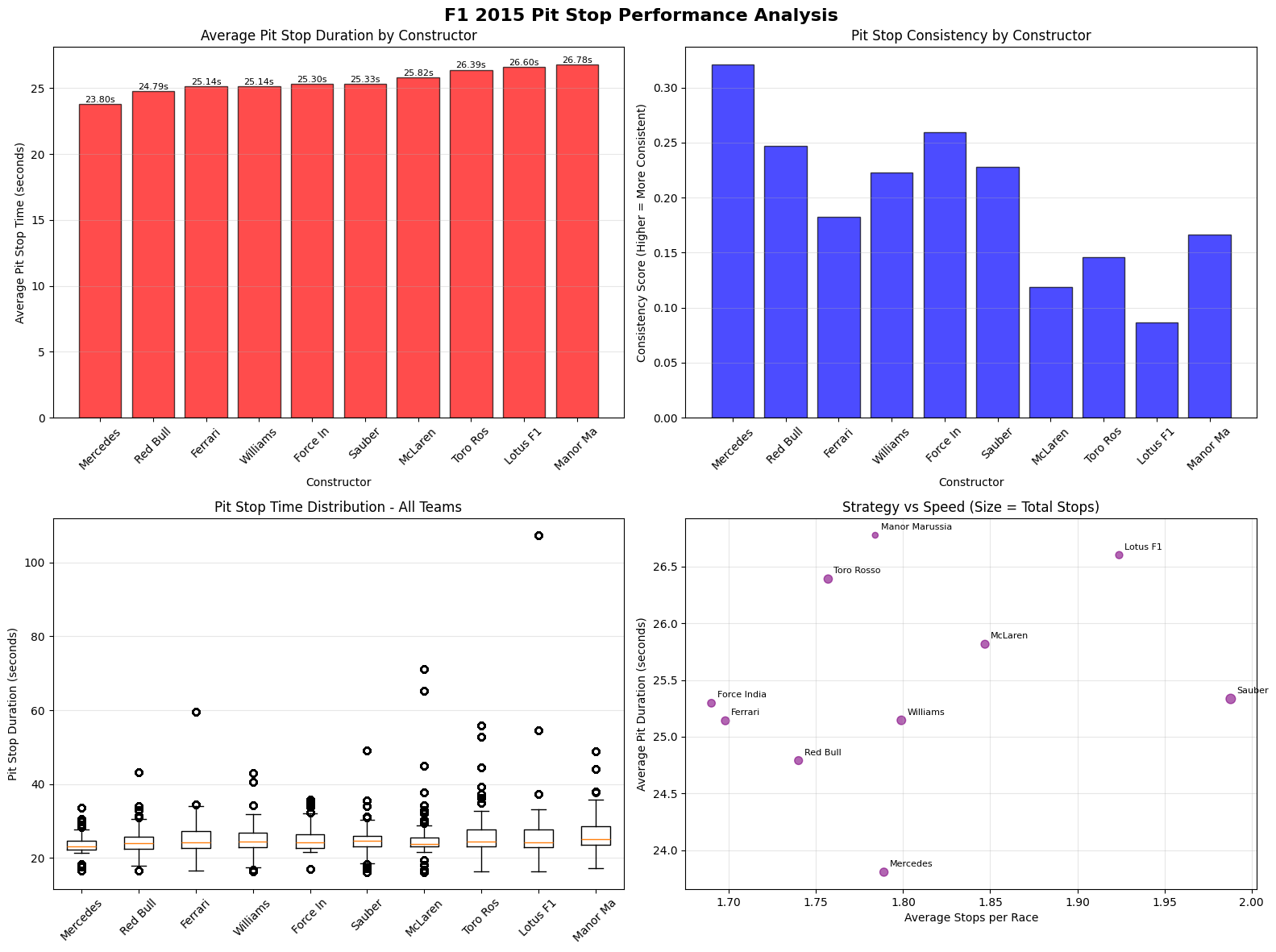

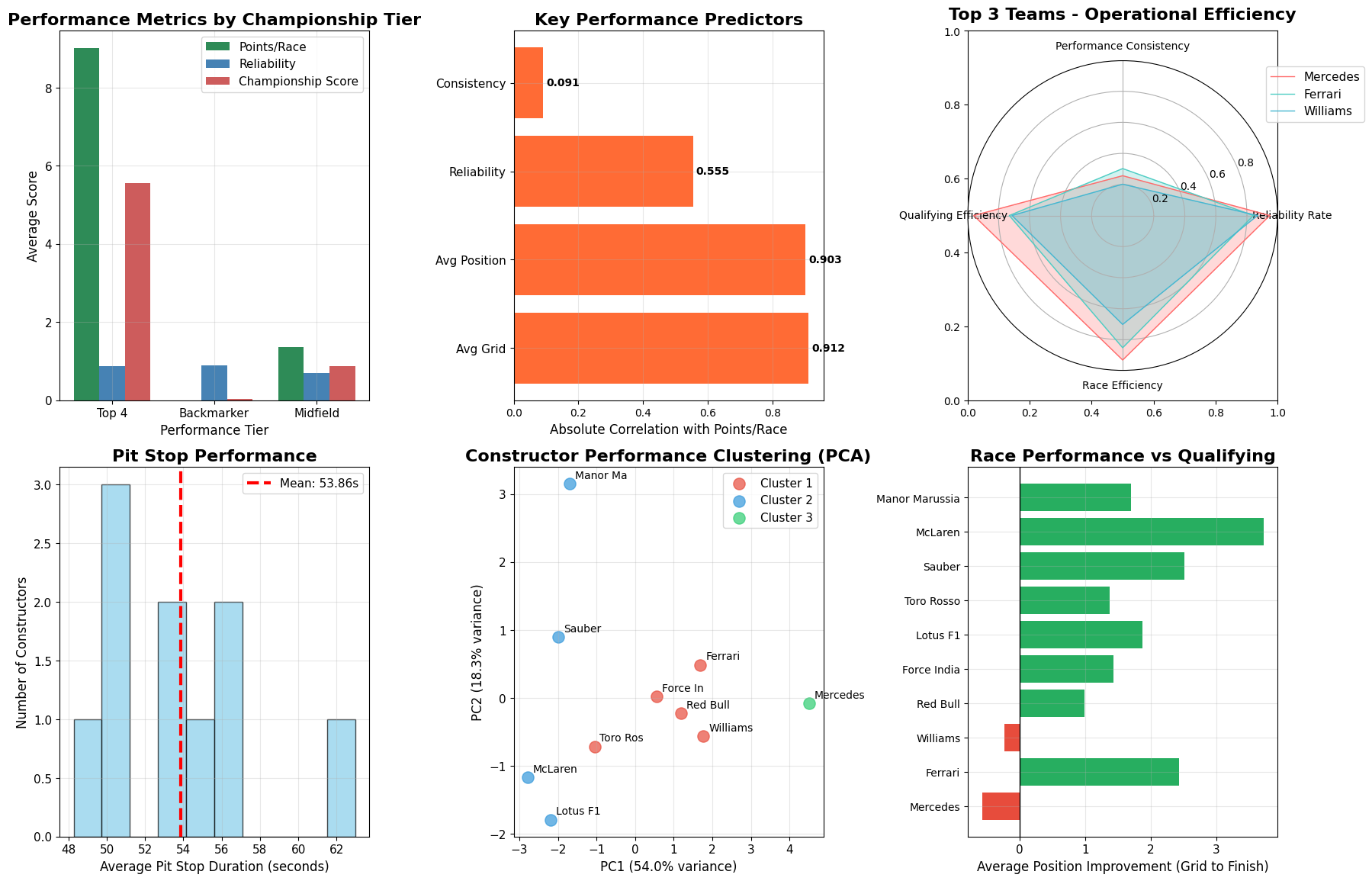

Speed-Focused Approach: Mercedes leads with the fastest average pit stop time (23.80 seconds; these durations cover the whole pit-lane transit, not only the stationary stop) while maintaining exceptional consistency (0.325 coefficient). This combination represents operational excellence: both speed and reliability in pit lane execution. Red Bull follows with 24.79 seconds, in line with their renowned pit crew efficiency.

Consistency-First Strategy: Lotus F1 shows the most consistent pit stops (0.087 coefficient) but sacrifices some speed, averaging 26.78 seconds. This conservative approach minimizes the risk of costly errors that could compromise race positions.
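The consistency score in the pit stop code is `1 / (std_duration + 0.1)`, so it falls as the spread of a team's stop times grows. A minimal sketch of that formula and its inverse, using hypothetical stop times rather than the 2015 data; `consistency_score` and `implied_std` are illustrative helper names, not functions from the notebook:

```python
import numpy as np


def consistency_score(durations, offset=0.1):
    """1 / (sample std + offset), mirroring the notebook's formula.

    ddof=1 matches the sample standard deviation that pandas' .std() returns.
    The offset keeps the score finite when every stop takes the same time.
    """
    durations = np.asarray(durations, dtype=float)
    return 1.0 / (durations.std(ddof=1) + offset)


def implied_std(score, offset=0.1):
    """Invert the formula: the standard deviation that produces a given score."""
    return 1.0 / score - offset


# Hypothetical pit-lane times in seconds for two teams
tight = [22.1, 22.3, 22.0, 22.4]
loose = [21.5, 24.8, 22.0, 27.3]

for label, times in (("tight", tight), ("loose", loose)):
    score = consistency_score(times)
    print(f"{label}: score={score:.3f}, std={implied_std(score):.2f} s")
```

Because the score decreases monotonically as the standard deviation grows, a lower score always corresponds to a wider spread of stop times.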

Elite Operational Teams: The top tier consists of Mercedes, Red Bull, and Ferrari, all clustering around 24-25 seconds with reasonable consistency. These teams demonstrate the operational maturity expected from championship contenders, where every tenth of a second in the pits can determine race outcomes.

Midfield Struggles: Williams through Sauber occupy the middle ground (25.1-25.8 seconds), showing adequate but not exceptional pit performance. Their consistency metrics vary significantly, suggesting differing approaches to risk management during pit windows.

Backmarker Challenges: Manor Marussia's 26.78-second average likely reflects the resource constraints typical of smaller teams, though their consistency isn't dramatically worse than that of some midfield competitors.

Mercedes' High Performance: Tight distribution with few outliers demonstrates systematic operational control and training effectiveness.

High-Variance Teams:

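The Strategy vs. Speed Matrix (bottom-right) reveals each team's operational DNA by plotting average stops per race against average pit duration, with marker size scaled to total stops.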

Competitive Advantages: Teams like Mercedes and Red Bull show how operational excellence compounds competitive advantages. A 2-3 second pit stop advantage, multiplied across 2-3 stops per race, can decide podium positions in closely contested championships.
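The cumulative effect is simple multiplication: the per-stop deficit times the number of stops. A small sketch over the ranges quoted above (2-3 seconds per stop, 2-3 stops per race); `cumulative_pit_loss` is an illustrative helper, not part of the analysis code:

```python
def cumulative_pit_loss(gap_per_stop: float, stops: int) -> float:
    """Total time lost in a race when every stop is `gap_per_stop` seconds slower."""
    return gap_per_stop * stops


# Every combination of a 2-3 s per-stop gap and 2-3 stops per race
losses = {(gap, stops): cumulative_pit_loss(gap, stops)
          for gap in (2, 3) for stops in (2, 3)}
for (gap, stops), loss in sorted(losses.items()):
    print(f"{gap} s/stop x {stops} stops = {loss} s")
print(f"range: {min(losses.values())}-{max(losses.values())} s per race")
```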

The data suggests successful teams optimize for consistency first, then speed. Mercedes' combination of fast times with low variability points to systematic training, quality equipment, and procedural discipline. In contrast, teams showing high speed but poor consistency may suffer from pressure-induced errors or inadequate preparation.

The apparent link between team resources and pit performance (budgets are not measured in this analysis) reflects F1's technical nature extending beyond the car itself. Elite teams invest heavily in pit crew training, equipment, and practice facilities, creating advantages that carry through the race weekend. In Formula 1's marginal-gains environment, pit stop performance is a crucial battleground where operational excellence can partly offset car performance deficits, making it an essential component of championship-winning organizations.

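def convert_duration(duration_str):
    """Convert a duration string ('23.456' or 'M:SS.sss') to seconds."""
    try:
        # '\N' marks a missing value in the source CSV
        if pd.isna(duration_str) or duration_str == '\\N':
            return np.nan
        duration_str = str(duration_str)
        if ':' in duration_str:
            # 'M:SS.sss' -> minutes * 60 + seconds
            parts = duration_str.split(':')
            return float(parts[0]) * 60 + float(parts[1])
        return float(duration_str)
    except (TypeError, ValueError):
        return np.nan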

pit_stops_2015['duration_seconds'] = pit_stops_2015['duration'].apply(convert_duration)
# Keep durations between 1 and 60 seconds; NaN values fail both comparisons and are dropped too
pit_stops_2015 = pit_stops_2015[(pit_stops_2015['duration_seconds'] >= 1) &
                                (pit_stops_2015['duration_seconds'] <= 60)]

pit_analysis = pit_stops_2015.merge(results_2015[['raceId', 'driverId', 'constructorId']],
                                    on=['raceId', 'driverId'])
pit_analysis = pit_analysis.merge(constructors[['constructorId', 'name']], on='constructorId')

pit_efficiency = pit_analysis.groupby(['constructorId', 'name']).agg({
    'duration_seconds': ['mean', 'std', 'count'],
    'stop': 'mean'}).round(3)
pit_efficiency.columns = ['_'.join(col) for col in pit_efficiency.columns]
pit_efficiency = pit_efficiency.reset_index()

# Min-max scale average pit time to 0-100 (fastest team = 100, slowest = 0);
# fall back to 0 when there is no data or every team has the same average
min_time = pit_efficiency['duration_seconds_mean'].min()
max_time = pit_efficiency['duration_seconds_mean'].max()
if len(pit_efficiency) > 0 and max_time > min_time:
    pit_efficiency['pit_stop_efficiency'] = ((max_time - pit_efficiency['duration_seconds_mean']) /
                                             (max_time - min_time)) * 100
else:
    pit_efficiency['pit_stop_efficiency'] = 0

if len(pit_analysis) > 0:
    # One row per driver per race: number of stops and the timing of those stops
    strategy_analysis = pit_analysis.groupby(['name', 'raceId', 'driverId']).agg({
        'stop': 'max',
        'lap': ['mean', 'std']}).reset_index()
    strategy_analysis.columns = ['name', 'raceId', 'driverId', 'total_stops', 'avg_pit_lap', 'pit_timing_std']

    strategy_summary = strategy_analysis.groupby('name').agg({
        'total_stops': ['mean', 'std'],
        'avg_pit_lap': 'mean',
        'pit_timing_std': 'mean'}).round(3)
    strategy_summary.columns = ['total_stops_mean', 'total_stops_std', 'avg_pit_lap_mean', 'pit_timing_std_mean']
    strategy_summary = strategy_summary.reset_index()

    # Map each spread to (0, 1]: a smaller spread gives a score closer to 1
    strategy_summary['strategy_consistency'] = 1 / (1 + strategy_summary['total_stops_std'].fillna(1))
    strategy_summary['timing_efficiency'] = 1 / (1 + strategy_summary['pit_timing_std_mean'].fillna(1))
    strategy_summary['strategy_efficiency'] = ((strategy_summary['strategy_consistency'] +
                                                strategy_summary['timing_efficiency']) / 2) * 100
else:
    strategy_summary = pd.DataFrame(columns=['name', 'strategy_efficiency'])

comprehensive_efficiency = constructor_metrics.merge(
    pit_efficiency[['name', 'pit_stop_efficiency']], on='name', how='left').merge(
    strategy_summary[['name', 'strategy_efficiency']], on='name', how='left')
comprehensive_efficiency['pit_stop_efficiency'] = comprehensive_efficiency['pit_stop_efficiency'].fillna(0)
comprehensive_efficiency['strategy_efficiency'] = comprehensive_efficiency['strategy_efficiency'].fillna(0)
comprehensive_efficiency = comprehensive_efficiency.sort_values('overall_efficiency', ascending=False)

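from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler

cluster_features = ['overall_efficiency', 'pit_stop_efficiency', 'strategy_efficiency', 'reliability_efficiency']
cluster_data = comprehensive_efficiency[cluster_features].fillna(0)
scaler = StandardScaler()
scaled_features = scaler.fit_transform(cluster_data)
kmeans = KMeans(n_clusters=3, random_state=42, n_init=10)
comprehensive_efficiency['cluster'] = kmeans.fit_predict(scaled_features)

# K-means cluster ids are arbitrary, so rank clusters by mean overall efficiency before naming them
cluster_means = comprehensive_efficiency.groupby('cluster')['overall_efficiency'].mean()
cluster_rank = cluster_means.rank(method='first').astype(int) - 1  # 0 = lowest mean
cluster_names = {0: 'Development Teams', 1: 'Mid-tier Teams', 2: 'Operational Leaders'}
comprehensive_efficiency['cluster_name'] = comprehensive_efficiency['cluster'].map(cluster_rank).map(cluster_names)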

fig = plt.figure(figsize=(18, 12))

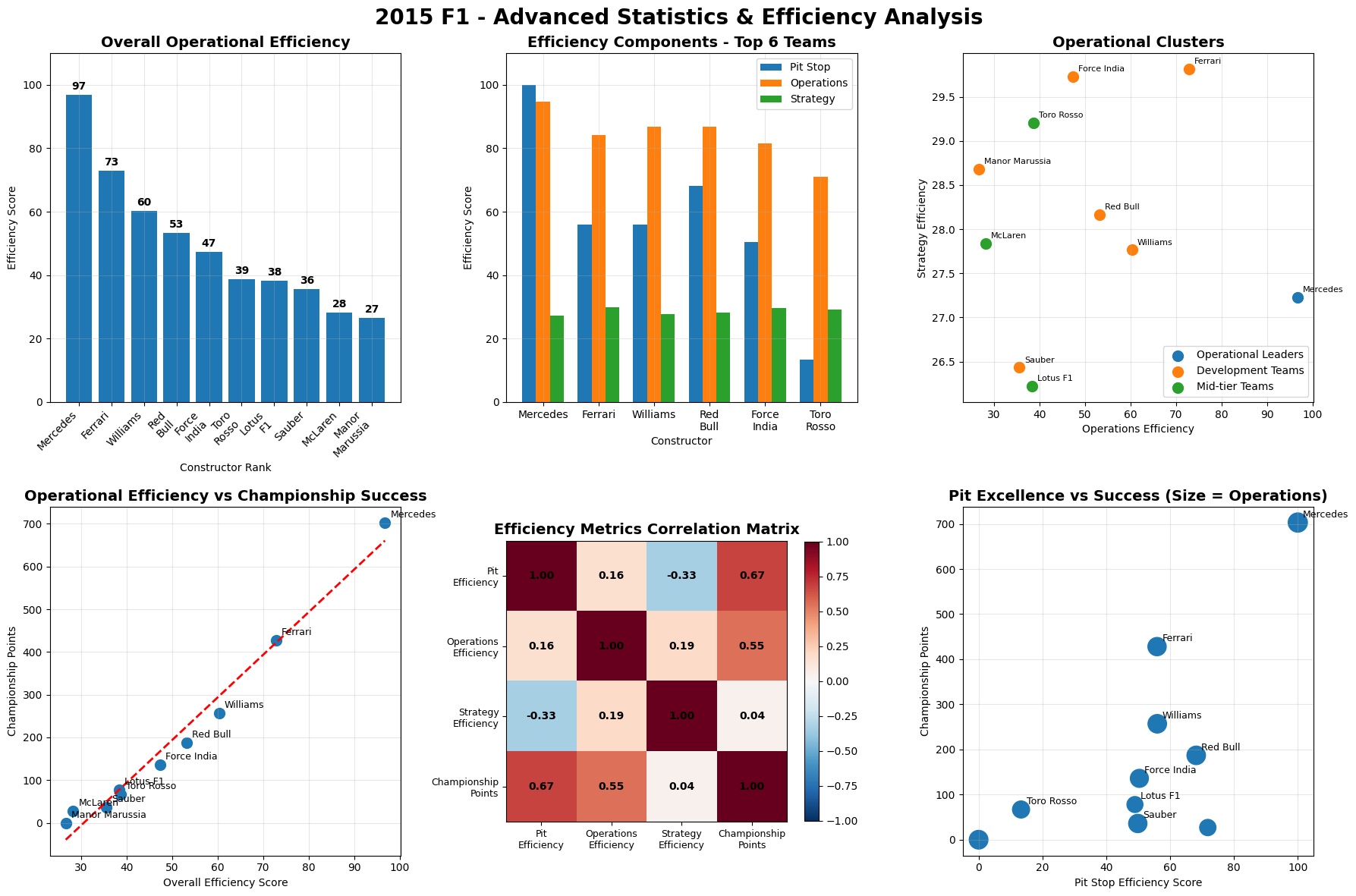

fig.suptitle('2015 F1 - Advanced Statistics & Efficiency Analysis', fontsize=20, fontweight='bold', y=0.98)

# 1. Overall Operational Efficiency
plt.subplot(2, 3, 1)
top_10 = comprehensive_efficiency.head(10)
bars = plt.bar(range(len(top_10)), top_10['overall_efficiency'])
plt.title('Overall Operational Efficiency', fontsize=14, fontweight='bold')
plt.ylabel('Efficiency Score')
plt.xlabel('Constructor Rank')
plt.xticks(range(len(top_10)), [name.replace(' ', '\n') for name in top_10['name']],
           rotation=45, ha='right', fontsize=10)
plt.ylim(0, 110)
plt.grid(True, alpha=0.3)
for bar, score in zip(bars, top_10['overall_efficiency']):
    plt.text(bar.get_x() + bar.get_width()/2, bar.get_height() + 1,
             f'{score:.0f}', ha='center', va='bottom', fontweight='bold')

# 2. Efficiency Components - Top 6 Teams
plt.subplot(2, 3, 2)
top_6 = comprehensive_efficiency.head(6)
x = np.arange(len(top_6))
width = 0.25
bars1 = plt.bar(x - width, top_6['pit_stop_efficiency'], width, label='Pit Stop')
bars2 = plt.bar(x, top_6['reliability_efficiency'], width, label='Operations')
bars3 = plt.bar(x + width, top_6['strategy_efficiency'], width, label='Strategy')
plt.title('Efficiency Components - Top 6 Teams', fontsize=14, fontweight='bold')
plt.ylabel('Efficiency Score')
plt.xlabel('Constructor')
plt.xticks(x, [name.replace(' ', '\n') for name in top_6['name']], fontsize=10)
plt.legend()
plt.ylim(0, 110)